Market conditions are becoming more challenging as businesses attempt to scale, and integrating AI technologies is one way to improve operational efficiency. As of 2024, 72% of organizations had incorporated AI, including LLMs, into at least one business function, up from 55% the previous year.

Meanwhile, companies stuck with outdated systems struggle to keep up, falling behind their more innovative competitors. For CTOs, AI engineers, and product managers, staying competitive means addressing this challenge head-on.

A Generative AI Tech Stack is the foundation of any system designed to create new, innovative content, such as text, images, or music. Its core purpose is to drive creativity, automation, and efficiency—but only if it is optimized correctly.

Success requires selecting the right combination of tools, frameworks, and infrastructure. Each component must work in harmony to deliver cost-effective and high-performance results. As of January 15, 2025, understanding these foundational elements is crucial for maintaining a competitive edge in this rapidly evolving field.

Why Optimization Matters

Optimization matters for any business that needs to modernize outdated systems and make them more efficient. A well-designed tech stack improves performance, supports scalability, and keeps costs down. Consider healthcare, where AI is used in diagnostics, or media, where it automates content creation. In either case, an unoptimized system can suffer from high latency, excessive costs, and poor scalability, making it more of a liability than an asset.

Let’s learn how to build and optimize a generative AI tech stack that ensures success.

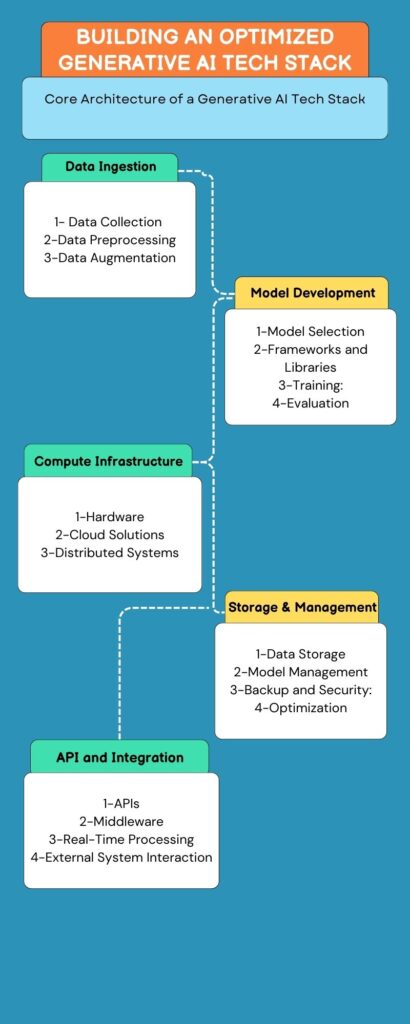

Core Architecture of a Generative AI Tech Stack

Building a robust generative AI system starts with understanding its foundational components.

Data Ingestion

Gathering and preprocessing data from various sources is the first step. It’s critical to ensure the data is high-quality and diverse for training effective generative models.
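
As a rough illustration of the preprocessing step, here is a minimal sketch that normalizes, filters, and de-duplicates raw text records. It uses only the Python standard library; the function name `clean_corpus`, the `min_chars` threshold, and the sample records are assumptions made for this example, and a production pipeline would more likely use a tool such as Pandas or PySpark.

```python
import re
import unicodedata


def clean_corpus(records, min_chars=20):
    """Normalize, filter, and de-duplicate raw text records (illustrative only)."""
    seen = set()
    cleaned = []
    for raw in records:
        # Normalize Unicode forms and collapse runs of whitespace.
        text = unicodedata.normalize("NFKC", raw)
        text = re.sub(r"\s+", " ", text).strip()
        # Drop fragments too short to be useful training examples.
        if len(text) < min_chars:
            continue
        # Remove exact duplicates, ignoring letter case.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Hypothetical sample input.
raw_records = [
    "Generative  models learn from large text corpora.",
    "generative models learn from large text corpora.",
    "too short",
    "Diverse, high-quality data improves model output.\n",
]
print(clean_corpus(raw_records))
```

Of the four sample records, the second is dropped as a duplicate and the third as too short, leaving two cleaned records.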

Model Development

This involves designing and training AI models capable of creating new content. Selecting the right algorithms and frameworks is key to achieving optimal performance.

Compute Infrastructure

A powerful and scalable infrastructure is necessary for training large AI models. Cloud-based solutions, GPUs, and distributed systems are commonly used to handle computational demands.

Storage and Management

Efficient data storage and management systems ensure easy access to training data and models. Scalability and reliability are essential to handle growing datasets and model versions.

API and Integration

APIs expose AI models to the applications that use them, enabling real-time inference and ensuring the tech stack can interact with external systems.

Key Tools and Technologies for Generative AI Development

The right tools can make or break your tech stack.

Data Handling Tools

- Cleaning: Pandas and PySpark, for refining raw datasets.

- Annotation: Label Studio and Scale AI, for creating labeled datasets.

- Augmentation: Albumentations (images), TextAttack (text).

Model Training Frameworks

- Deep Learning: TensorFlow and PyTorch for building powerful neural networks.

- Reinforcement Learning: Stable Baselines3 for decision-making tasks.

Deployment Tools

- Experiment Tracking: MLflow to track model versions and experiments.

- Model Serving: TensorFlow Serving, TorchServe for real-time inference.

Optimization Techniques

- Model Compression: Techniques like quantization, pruning, and distillation to improve efficiency.
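
To make quantization more concrete, the sketch below shows one simple variant, symmetric per-tensor int8 quantization, in plain Python. The function names and sample weights are assumptions for illustration; real frameworks apply more sophisticated schemes (per-channel scales, calibration data), so treat this as the idea rather than a production recipe.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [v * scale for v in quantized]


# Hypothetical weights for illustration.
weights = [0.42, -1.27, 0.05, 0.90, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of the four bytes a 32-bit float needs, at the cost of a rounding error of at most half the scale factor per weight.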

Building Blocks of a Scalable Generative AI System

Scalability ensures your system adapts to growing demands without spiraling costs.

Modular Design

Decoupling components allows for flexibility and easier upgrades.

Example: A modular framework can let you swap out the data processing module without affecting the entire system.
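
One way to get this kind of swappability is to have the pipeline depend on a small interface rather than on a concrete class. The sketch below assumes a hypothetical `Preprocessor` interface and a stand-in `echo_model` function; none of these names come from a specific library.

```python
from typing import Callable, Iterable, List, Protocol


class Preprocessor(Protocol):
    """Any object with a compatible process() method can be plugged in."""

    def process(self, texts: Iterable[str]) -> List[str]: ...


class LowercasePreprocessor:
    def process(self, texts: Iterable[str]) -> List[str]:
        return [t.lower() for t in texts]


class TruncatingPreprocessor:
    def __init__(self, max_chars: int) -> None:
        self.max_chars = max_chars

    def process(self, texts: Iterable[str]) -> List[str]:
        return [t[: self.max_chars] for t in texts]


class GenerationPipeline:
    """Depends only on the Preprocessor interface, not on a concrete class."""

    def __init__(self, preprocessor: Preprocessor, generate: Callable[[str], str]) -> None:
        self.preprocessor = preprocessor
        self.generate = generate

    def run(self, texts: Iterable[str]) -> List[str]:
        return [self.generate(t) for t in self.preprocessor.process(texts)]


def echo_model(prompt: str) -> str:
    # Stand-in for a real generative model call.
    return f"<generated from: {prompt}>"


pipeline = GenerationPipeline(LowercasePreprocessor(), echo_model)
first = pipeline.run(["Hello World"])

# Swap the data processing module without touching the rest of the pipeline.
pipeline.preprocessor = TruncatingPreprocessor(max_chars=5)
second = pipeline.run(["Hello World"])
print(first, second)
```

The generation step never changes here; only the object assigned to `preprocessor` does.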

Parallel Processing and Distributed Training

Training large models often requires splitting tasks across multiple GPUs.

Tools to consider:

- Horovod: Simplifies distributed training.

- Ray: Automates resource allocation for parallel tasks.
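
The core idea behind data-parallel training can be simulated on a single machine: each worker computes a gradient on its own shard of the batch, the gradients are averaged (the "all-reduce" step), and every worker applies the same update. The sketch below is a pure-Python simulation with a one-parameter linear model; it does not use the Horovod or Ray APIs, and the function names are assumptions for illustration.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the model y = w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(data, num_workers):
    """Split data into equally sized contiguous shards, one per worker."""
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]


def all_reduce_mean(values):
    """Stand-in for the all-reduce step: average the workers' gradients."""
    return sum(values) / len(values)


# Synthetic data generated with a true weight of 3.0.
data = [(float(x), 3.0 * x) for x in range(1, 9)]

w = 0.0
for step in range(50):
    # In a real cluster, each gradient would be computed on a separate GPU.
    worker_grads = [gradient(w, s) for s in shard(data, num_workers=4)]
    w -= 0.01 * all_reduce_mean(worker_grads)

print(round(w, 3))
```

Because the shards are the same size, the averaged gradient equals the gradient over the full batch, so the simulated workers reach the same result as single-device training.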

Cost-Efficient Solutions

- Use smaller open models, such as GPT-NeoX or the lower-parameter LLaMA variants, for specific tasks instead of defaulting to the largest model available.

- Leverage spot instances and cloud credits to reduce costs.

Optimizing Performance in a Generative AI Tech Stack

Performance optimization ensures your system delivers results quickly and efficiently.

Balancing Latency and Throughput

Real-time applications demand low latency for fast responses, while batch processing prioritizes throughput to handle large datasets efficiently. Adjusting configurations based on the use case ensures optimal performance for both scenarios, balancing speed and volume effectively.
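
The trade-off can be seen with simple arithmetic. The sketch below assumes a toy cost model in which every model call has a fixed overhead plus a per-item cost; the numbers (40 ms overhead, 5 ms per item) are invented for illustration and are not measurements.

```python
def batch_metrics(batch_size, fixed_overhead_ms, per_item_ms):
    """Return (latency in ms, throughput in requests/second) for one batch."""
    latency_ms = fixed_overhead_ms + per_item_ms * batch_size
    throughput = batch_size * 1000 / latency_ms
    return latency_ms, throughput


for batch_size in (1, 8, 32):
    latency_ms, throughput = batch_metrics(
        batch_size, fixed_overhead_ms=40, per_item_ms=5
    )
    print(f"batch={batch_size:>2}  latency={latency_ms} ms  "
          f"throughput={throughput:.1f} req/s")
```

Under these assumed numbers, moving from a batch of 1 to a batch of 32 raises latency from 45 ms to 200 ms, while throughput rises from about 22 to 160 requests per second. A real-time chatbot would favor the small batch; an overnight batch job would favor the large one.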

Data Pipeline Optimization

Speed up the ETL process using tools like Apache Beam and Kafka, which ensure smooth data flow and reduce bottlenecks. Optimizing the pipeline helps in faster data preprocessing, which is critical for efficient model training and inference.
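
The principle these tools share is streaming: records flow through each stage as they arrive instead of waiting for the whole dataset to be loaded. The sketch below imitates that with Python generators. It is not Apache Beam or Kafka code, and the stage names and sample data are assumptions for illustration.

```python
from itertools import islice


def read_records(source):
    """Extract: yield records one at a time instead of loading everything."""
    for line in source:
        yield line


def clean(records):
    """Transform: strip whitespace and skip empty records."""
    for record in records:
        record = record.strip()
        if record:
            yield record


def batched(records, batch_size):
    """Load: group cleaned records into fixed-size batches for the model."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical raw input; in practice this could be a file or a message stream.
source = ["alpha\n", "\n", " beta ", "gamma\n", "delta\n", "   "]
batches = list(batched(clean(read_records(source)), batch_size=2))
print(batches)
```

Each stage pulls only what it needs from the previous one, so memory use stays flat even when the input is large.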

Fine-Tuning Models for Efficiency

Techniques like LoRA and adapters train only a small set of added parameters while keeping the original model weights frozen, reducing the compute and memory needed during fine-tuning. This makes training faster and cheaper, often with little loss in the model’s performance.
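
A quick calculation shows where LoRA's savings come from. Instead of updating a full weight matrix of shape d_out x d_in, LoRA trains two low-rank matrices of shapes d_out x r and r x d_in. The layer size and rank below are assumed values chosen for illustration.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, rank):
    """Trainable parameters for the two low-rank matrices B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


# Assumed layer size and rank for illustration.
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For this single layer, LoRA trains 65,536 parameters instead of 16,777,216, which is about 0.39% of the original count.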

Leveraging Caching and Preprocessing

Caching repeated inference results can drastically reduce computation time on subsequent requests. Preprocessing data before feeding it into the model also ensures quicker inference, reducing system load and improving overall response time.

Building an Optimized Generative AI Tech Stack with Folio3

At Folio3, we specialize in creating tailored, generative AI solutions that transform businesses. From data management to model deployment, our expertise ensures your tech stack is optimized for performance, scalability, and cost-efficiency.

By partnering with Folio3, you gain access to:

- Industry-leading tools and frameworks.

- Bespoke solutions designed for your unique needs.

- Continuous support and monitoring to keep your systems running smoothly.

FAQs

1. What Is a Generative AI Tech Stack?

A generative AI tech stack is a set of technologies, tools, and processes designed to develop, train, and deploy AI models that generate new content, such as text, images, or audio.

2. Why Is Optimization Important in a Generative AI Tech Stack?

Optimization improves performance, reduces costs, and ensures scalability, making your system efficient and ready for real-world applications.

3. What Are the Key Components of a Generative AI Tech Stack?

The key components are data ingestion, model development, compute infrastructure, storage and management, and API and integration.

4. What Tools Are Essential for Generative AI Development?

Essential tools include TensorFlow, PyTorch, Apache Hadoop, Snowflake, Docker, Kubernetes, and MLflow.

5. How Can I Make My Generative AI System Scalable?

Use modular design, parallel processing tools like Horovod, and cost-efficient solutions like smaller models and cloud credits.

Manahil Samuel holds a Bachelor’s in Computer Science and has worked on artificial intelligence and computer vision. She skillfully combines her technical expertise with digital marketing strategies, utilizing AI-driven insights for precise and impactful content. Her work embodies a distinctive fusion of technology and storytelling, exemplifying her keen grasp of contemporary AI market standards.