In the age of digital transformation, images are ubiquitous. However, efficiently organizing, retrieving, and processing vast amounts of visual data poses significant challenges. Image retrieval systems, which aim to find relevant images in large databases based on a query image or keyword, play a pivotal role in addressing these challenges.

At the heart of these systems lie feature extraction and feature matching, two foundational techniques that largely determine their performance and accuracy.

This post walks through feature extraction and matching, their key techniques, and how they contribute to modern image retrieval systems.

Introduction to Feature Extraction and Matching

What Are Features?

In image processing, features are distinct attributes or patterns within an image that are used to describe and distinguish it from other images. Features could range from simple characteristics, such as edges and corners, to complex patterns like textures and shapes. Features are broadly categorized into two types:

- Low-Level Features: Basic characteristics like color, texture, and shape.

- High-Level Features: Semantic attributes such as objects or concepts within an image.

Why Are Features Important?

In image retrieval, the ability to represent an image using its key features allows for:

- Efficient Comparison: By representing images as compact feature vectors, a system can compare images much faster than by comparing raw pixel data.

- Robustness: Well-chosen features make the system resilient to variations in scale, orientation, and illumination.

Feature Extraction: The First Step

Feature extraction is the process of identifying and describing the relevant features of an image. The goal is to reduce the dimensionality of the data while preserving its important characteristics.

1. Global vs. Local Features

- Global Features: These describe the image as a whole. Examples include color histograms and shape descriptors.

- Local Features: These focus on specific regions or keypoints in the image. Examples include corners, edges, or blobs.

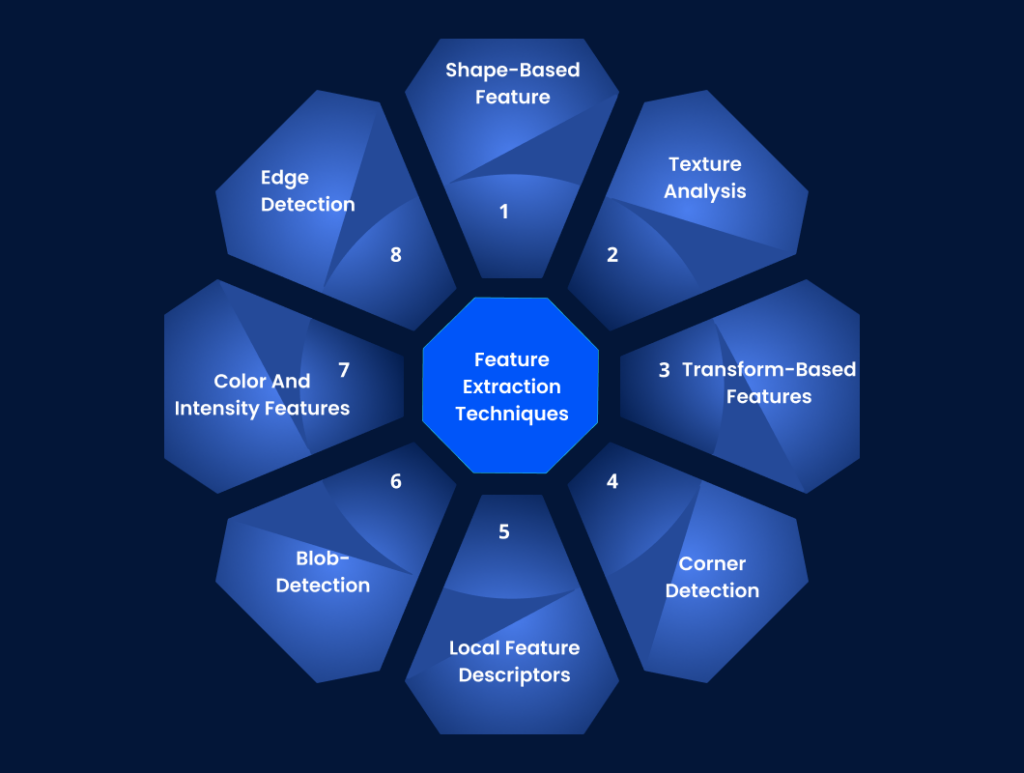

2. Key Feature Extraction Techniques

a) Color Features

Color is a fundamental visual cue, and several techniques have been developed to leverage it:

- Color Histograms: Represent the distribution of colors in an image. While simple, they are sensitive to lighting changes.

- Color Moments: Summarize the statistical distribution of colors, offering a compact representation.

- Color Correlograms: Capture how often pairs of colors co-occur at given pixel distances, adding the spatial information that plain histograms discard.
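To make the first two descriptors concrete, here is a minimal NumPy sketch of a per-channel color histogram and of color moments. The function names, the choice of 8 bins per channel, and the random test image are assumptions made for illustration; a real system would load actual images and might use a different color space.

```python
import numpy as np

def color_histogram(image, bins=8):
    """Concatenated per-channel histogram, normalised to sum to 1.

    image: uint8 array of shape (H, W, C).
    """
    hists = []
    for c in range(image.shape[2]):
        h, _ = np.histogram(image[..., c], bins=bins, range=(0, 256))
        hists.append(h)
    hist = np.concatenate(hists).astype(np.float64)
    return hist / hist.sum()

def color_moments(image):
    """Mean, standard deviation and skewness for each channel."""
    pixels = image.reshape(-1, image.shape[2]).astype(np.float64)
    mean = pixels.mean(axis=0)
    std = pixels.std(axis=0)
    # Cube root of the third central moment, so it stays in pixel units.
    skew = np.cbrt(((pixels - mean) ** 3).mean(axis=0))
    return np.concatenate([mean, std, skew])

# Hypothetical stand-in for a real RGB image.
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

hist = color_histogram(img)   # 3 channels x 8 bins = 24 values
moments = color_moments(img)  # 3 channels x 3 moments = 9 values
```

The histogram gives a 24-value vector and the moments only 9, which shows why color moments are described as the more compact representation.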

b) Texture Features

Texture describes patterns or repetitions in pixel intensities, often representing surface properties. Popular texture extraction methods include:

- Gray Level Co-occurrence Matrix (GLCM): Captures the spatial relationship between pixel intensities.

- Local Binary Patterns (LBP): Encode local texture by comparing each pixel with its neighbors and storing the results as a binary code.

- Gabor Filters: Analyze texture by decomposing the image into different frequency and orientation components.
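As an illustration of the LBP idea, the sketch below computes the basic 3x3 variant with NumPy and summarises the codes as a 256-bin histogram. The function name and the neighbor ordering are assumptions; library implementations typically offer circular neighborhoods and rotation-invariant variants that are not shown here.

```python
import numpy as np

def lbp_histogram(gray):
    """Basic 3x3 LBP histogram for a 2D grayscale array."""
    gray = gray.astype(np.int32)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    # Eight neighbour offsets (dy, dx), clockwise from the top-left.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

# Hypothetical texture patch; values stay below 200 so adding 40 remains in range.
rng = np.random.default_rng(0)
texture = rng.integers(0, 200, size=(16, 16))

hist = lbp_histogram(texture)
brighter = lbp_histogram(texture + 40)  # same texture, uniformly brighter
```

Because each code depends only on comparisons with the center pixel, the two histograms are identical, which is the reason LBP tolerates uniform brightness shifts.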

c) Shape Features

Shape descriptors are crucial when identifying objects or contours in an image. Techniques include:

- Contour-Based Descriptors: Such as Fourier descriptors, which represent shapes using boundary information.

- Region-Based Descriptors: Like Zernike moments, which capture the global shape characteristics.
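A contour-based descriptor can be sketched in a few lines. The example below treats the boundary as a complex signal, takes its FFT, and normalises the magnitudes. It assumes the contour is an ordered, evenly sampled, counter-clockwise list of points; the function name and the number of coefficients kept are illustrative choices.

```python
import numpy as np

def fourier_descriptor(contour, n_coeffs=4):
    """Fourier descriptor of a closed contour given as an (N, 2) array."""
    z = contour[:, 0] + 1j * contour[:, 1]  # boundary as a complex signal
    mags = np.abs(np.fft.fft(z))            # magnitudes drop rotation and start point
    low_pos = mags[2:2 + n_coeffs]          # low positive frequencies
    low_neg = mags[-n_coeffs:]              # low negative frequencies
    # Index 0 (the centroid term) is skipped, which removes translation.
    # Dividing by the first harmonic removes scale.
    return np.concatenate([low_pos, low_neg]) / mags[1]

# An ellipse sampled at 64 points, counter-clockwise.
t = np.linspace(0, 2 * np.pi, 64, endpoint=False)
ellipse = np.column_stack([3 * np.cos(t), np.sin(t)])

# The same shape rotated, scaled, shifted, and with a different start point.
theta = 0.7
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta), np.cos(theta)]])
moved = 2.5 * np.roll(ellipse, 5, axis=0) @ R.T + np.array([10.0, -5.0])

d_original = fourier_descriptor(ellipse)
d_moved = fourier_descriptor(moved)
```

The two descriptors match up to floating-point error, while a circle sampled the same way yields a different vector.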

d) Keypoint Detection

Keypoints are distinctive points in an image that remain stable under transformations. Popular keypoint detection techniques include:

- Harris Corner Detector: Identifies corners in an image.

- Difference of Gaussians (DoG): Used in the Scale-Invariant Feature Transform (SIFT) to detect stable keypoints at multiple scales.
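The Harris detector can be sketched directly from its definition: build the gradient structure tensor in a small window and score each pixel with det - k * trace^2. The version below is a simplified illustration that uses a 3x3 box window instead of the usual Gaussian weighting. The helper names and k = 0.05 are assumptions, and production code would normally call a library implementation instead.

```python
import numpy as np

def box_filter(a, radius=1):
    """Sum over a (2r+1) x (2r+1) window using shifted copies.

    np.roll wraps around at the borders, so this sketch assumes the
    image content does not touch the edges.
    """
    out = np.zeros_like(a)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out += np.roll(a, (dy, dx), axis=(0, 1))
    return out

def harris_response(gray, k=0.05):
    """Harris corner response for a 2D grayscale array."""
    gray = gray.astype(np.float64)
    Iy, Ix = np.gradient(gray)  # derivatives along rows, then columns
    Sxx = box_filter(Ix * Ix)
    Syy = box_filter(Iy * Iy)
    Sxy = box_filter(Ix * Iy)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2

# Synthetic test image: a bright square on a dark background.
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0

R = harris_response(img)
corner = np.unravel_index(np.argmax(R), R.shape)
```

On this image the strongest response falls on a corner of the square. Points along a straight edge score negative and flat regions score zero, which is the behavior that separates corners from edges.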

Feature Matching: Connecting the Dots

Once features are extracted, the next step is to match them between images. Feature matching involves finding correspondences between the feature sets of two images to determine similarity.

1. Matching Strategies

Feature matching can be broadly categorized into:

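- Exact Matching: Finds the true nearest neighbor of each descriptor, typically by exhaustive comparison. It is practical for small feature sets and for compact binary descriptors that are cheap to compare.

- Approximate Matching: Trades a small amount of accuracy for speed by searching an index structure. It is common for high-dimensional descriptors such as SIFT or HOG in large collections.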

2. Distance Metrics for Matching

Feature matching relies on computing a distance or similarity score between feature vectors. Common measures include:

- Euclidean Distance: Measures the straight-line distance between two vectors.

- Cosine Similarity: Measures the angle between two vectors, focusing on their direction rather than magnitude.

- Hamming Distance: Counts the bits that differ between two binary feature vectors, such as ORB descriptors.
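The three measures are short enough to write out. The sketch below uses NumPy; the function names are illustrative, and the Hamming version assumes binary descriptors stored as packed `uint8` bytes, as is common for ORB-style descriptors.

```python
import numpy as np

def euclidean_distance(a, b):
    """Straight-line distance between two vectors."""
    return float(np.linalg.norm(a - b))

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1 = same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def hamming_distance(a, b):
    """Number of differing bits between two packed uint8 descriptors."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())

d_euclid = euclidean_distance(np.array([0.0, 0.0]), np.array([3.0, 4.0]))
s_same_dir = cosine_similarity(np.array([1.0, 0.0]), np.array([2.0, 0.0]))
s_orthogonal = cosine_similarity(np.array([1.0, 0.0]), np.array([0.0, 1.0]))
d_hamming = hamming_distance(np.array([0b11110000], dtype=np.uint8),
                             np.array([0b00000000], dtype=np.uint8))
```

Note that cosine similarity grows as vectors become more alike, while the two distances shrink, so a matcher has to sort in the right direction for whichever measure it uses.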

3. Key Feature Matching Algorithms

a) Brute-Force Matching

This straightforward method compares every feature in one image with every feature in another image. It always finds the true nearest match, but its cost grows with the product of the two feature counts, which makes it computationally expensive for large datasets.
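A minimal sketch of brute-force matching, assuming real-valued descriptors compared with Euclidean distance. The function name and the synthetic descriptors are made up for the example.

```python
import numpy as np

def brute_force_match(query, train):
    """For each query descriptor, find the closest train descriptor.

    query: (n_query, d) array, train: (n_train, d) array.
    Returns the index of the nearest train descriptor and its distance.
    """
    # All pairwise differences via broadcasting: (n_query, n_train, d).
    diff = query[:, None, :] - train[None, :, :]
    dists = np.sqrt((diff ** 2).sum(axis=2))
    nearest = dists.argmin(axis=1)
    return nearest, dists[np.arange(len(query)), nearest]

# Hypothetical descriptors: the queries are noisy copies of rows 3, 10 and 42.
rng = np.random.default_rng(0)
train = rng.normal(size=(50, 16))
query = train[[3, 10, 42]] + 0.01 * rng.normal(size=(3, 16))

nearest, distances = brute_force_match(query, train)
```

The intermediate array holds one entry per query, train and dimension triple, which makes the quadratic cost of the approach easy to see.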

b) k-Nearest Neighbors (k-NN)

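This method finds the k closest features for each feature in the query image. It is often paired with a ratio test: with k = 2, a match is kept only when the best candidate is clearly closer than the second-best, which eliminates ambiguous matches.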
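The sketch below shows a 2-nearest-neighbor search followed by a ratio test in NumPy. The function name is illustrative, and the 0.75 threshold is an assumption; values of roughly 0.7 to 0.8 are commonly used.

```python
import numpy as np

def ratio_test_matches(query, train, ratio=0.75):
    """Return (query_idx, train_idx) pairs that pass the ratio test."""
    diff = query[:, None, :] - train[None, :, :]
    dists = np.sqrt((diff ** 2).sum(axis=2))
    # Indices of the two nearest train descriptors for every query.
    order = np.argsort(dists, axis=1)[:, :2]
    rows = np.arange(len(query))
    best = dists[rows, order[:, 0]]
    second = dists[rows, order[:, 1]]
    # Keep a match only if the best is clearly closer than the runner-up.
    keep = best < ratio * second
    return [(int(q), int(order[q, 0])) for q in np.flatnonzero(keep)]

# Toy descriptors: train rows 2 and 3 are near-duplicates of each other.
train = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [0.0, 10.1]])
query = np.array([[0.1, 0.0],     # clearly closest to train row 0
                  [0.0, 10.05]])  # ambiguous between rows 2 and 3

matches = ratio_test_matches(query, train)
```

Only the first query survives. The second sits between two nearly identical candidates, so it is dropped as ambiguous even though its nearest distance is small.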

c) FLANN (Fast Library for Approximate Nearest Neighbors)

FLANN accelerates matching by searching index structures, such as randomized k-d trees and hierarchical k-means trees, for approximate rather than exact nearest neighbors. It is particularly useful for large-scale image datasets.

d) RANSAC (Random Sample Consensus)

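RANSAC refines a set of candidate matches rather than producing them. It repeatedly fits a geometric transformation (for example, an affine map or a homography) to a small random subset of matches and keeps the model that agrees with the most matches. Matches that disagree with the final model are rejected as outliers, which improves robustness.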
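Here is a compact sketch of the RANSAC loop for a 2D affine transformation. The function names, the iteration count, the 1-pixel inlier threshold, and the synthetic correspondences are all assumptions made for illustration.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares affine map M (3 x 2) such that [src, 1] @ M ~ dst."""
    X = np.hstack([src, np.ones((len(src), 1))])
    M, *_ = np.linalg.lstsq(X, dst, rcond=None)
    return M

def ransac_affine(src, dst, n_iters=200, threshold=1.0, seed=0):
    """Estimate an affine map from matches that may contain outliers."""
    rng = np.random.default_rng(seed)
    X = np.hstack([src, np.ones((len(src), 1))])
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Three correspondences are the minimal sample for an affine map.
        sample = rng.choice(len(src), size=3, replace=False)
        M = fit_affine(src[sample], dst[sample])
        errors = np.linalg.norm(X @ M - dst, axis=1)
        inliers = errors < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refit on all inliers of the best model for a more stable estimate.
    return fit_affine(src[best_inliers], dst[best_inliers]), best_inliers

# Synthetic matches: 30 follow a known affine map, 10 are wrong.
rng = np.random.default_rng(1)
src = rng.uniform(0, 100, size=(40, 2))
M_true = np.array([[0.9, 0.2], [-0.2, 0.9], [5.0, -3.0]])
dst = np.hstack([src, np.ones((40, 1))]) @ M_true
dst += rng.normal(scale=0.01, size=(40, 2))
dst[30:] = rng.uniform(1000, 2000, size=(10, 2))  # simulated bad matches

M_est, inliers = ransac_affine(src, dst)
```

In a real pipeline, `src` and `dst` would be the keypoint coordinates of the matches that survived the ratio test, and a homography would often replace the affine model.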

Modern Approaches: Deep Learning for Feature Extraction and Matching

With the rise of deep learning, handcrafted features have given way to features learned directly from data.

1. Convolutional Neural Networks (CNNs)

CNNs have revolutionized feature extraction by automatically learning hierarchical features from raw images. Key layers include:

- Convolutional Layers: Extract spatial features like edges or textures.

- Pooling Layers: Downsample features for computational efficiency.

- Fully Connected Layers: Aggregate extracted features into a compact representation.
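To show what these three layer types do, the sketch below runs a tiny forward pass in plain NumPy. It is not a trained network. The edge-detecting kernel is set by hand where a CNN would learn it, the fully connected weights are random, and all names are illustrative.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' sliding-window filter (cross-correlation, as in most DL frameworks)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0)

def max_pool2d(x, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h = x.shape[0] // size * size
    w = x.shape[1] // size * size
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# A 6x6 image with a vertical edge between columns 2 and 3.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-set vertical edge detector (a trained CNN would learn such kernels).
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = relu(conv2d(image, kernel))  # convolutional layer, shape (4, 4)
pooled = max_pool2d(feature_map)           # pooling layer, shape (2, 2)

# Fully connected layer with random, untrained weights: 4 inputs -> 3 outputs.
W = np.random.default_rng(0).normal(size=(4, 3))
embedding = pooled.ravel() @ W
```

The feature map responds only where the window straddles the edge, pooling halves each spatial dimension, and the final matrix product collapses what is left into a short vector that could serve as an image descriptor.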

2. Deep Feature Matching

Deep learning-based feature matching leverages architectures like Siamese networks and Triplet networks to learn feature embeddings optimized for similarity comparison.
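Triplet networks are usually trained with a triplet loss, which pulls an anchor embedding toward a matching (positive) example and pushes it away from a non-matching (negative) one. A minimal NumPy sketch of one common formulation follows; the squared Euclidean distance and the margin of 0.2 are assumptions, and a real training loop would compute this inside an autodiff framework.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss over a batch of embeddings of shape (batch, d)."""
    d_pos = np.sum((anchor - positive) ** 2, axis=1)  # anchor to matching image
    d_neg = np.sum((anchor - negative) ** 2, axis=1)  # anchor to non-matching image
    # Zero loss once the negative is farther than the positive by the margin.
    return float(np.maximum(d_pos - d_neg + margin, 0.0).mean())

anchor = np.array([[0.0, 0.0]])
near = np.array([[0.0, 0.1]])
far = np.array([[1.0, 0.0]])

loss_good = triplet_loss(anchor, positive=near, negative=far)
loss_bad = triplet_loss(anchor, positive=far, negative=near)
```

The first triplet is already well separated and contributes no loss, while the second, where the "positive" is farther away than the "negative", is penalised.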

3. Pre-trained Models

Pre-trained models like VGG, ResNet, and EfficientNet are often used as feature extractors in image retrieval tasks. They can be used as-is, taking the activations of a late layer as the image descriptor, or fine-tuned on a specific dataset to extract more domain-relevant features.

Applications of Feature Extraction and Matching in Image Retrieval

1. Content-Based Image Retrieval (CBIR)

CBIR systems rely heavily on feature extraction and matching to find visually similar images. Applications include:

- Medical imaging for diagnosing diseases.

- E-commerce platforms for finding visually similar products.
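Whatever the application, the retrieval step of a CBIR system reduces to ranking stored feature vectors by their similarity to the query vector. The sketch below does this with cosine similarity in NumPy. The function name and the random "database" are stand-ins for real image descriptors, and large systems would typically replace the exhaustive scan with an approximate index.

```python
import numpy as np

def retrieve(query_vec, db_vecs, top_k=3):
    """Return indices and scores of the top_k most similar database vectors."""
    q = query_vec / np.linalg.norm(query_vec)
    db = db_vecs / np.linalg.norm(db_vecs, axis=1, keepdims=True)
    scores = db @ q                       # cosine similarity to every entry
    order = np.argsort(-scores)[:top_k]   # highest similarity first
    return order, scores[order]

# Hypothetical database of 100 image descriptors with 32 dimensions each.
rng = np.random.default_rng(0)
database = rng.normal(size=(100, 32))
# The query is a slightly perturbed copy of entry 17.
query = database[17] + 0.01 * rng.normal(size=32)

top_indices, top_scores = retrieve(query, database)
```

Entry 17 comes back first with a similarity close to 1, followed by whichever other entries happen to point in a similar direction.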

2. Facial Recognition

Facial recognition systems use deep features for robust face matching, even under challenging conditions like varying lighting or occlusions.

3. Object Recognition

Applications like autonomous vehicles and surveillance systems use feature matching to recognize and track objects in dynamic environments.

4. Augmented Reality

AR systems use feature matching to align virtual objects with real-world scenes accurately.

Challenges in Feature Extraction and Matching

Despite their utility, feature extraction and matching face several challenges:

- Scale and Orientation Variations: Ensuring features remain invariant under transformations.

- Illumination Changes: Robustness to lighting variations is critical.

- Large-Scale Datasets: Scaling feature matching for databases containing millions of images.

- Real-Time Processing: Balancing accuracy with computational efficiency for real-time applications.

Future Trends

The field of image retrieval is evolving rapidly. Some emerging trends include:

- Self-Supervised Learning: Techniques that learn features without labeled data are gaining traction.

- Transformers for Vision: Vision transformers (ViTs) are being explored for feature extraction.

- Cross-Modal Retrieval: Systems that bridge the gap between images and text for more flexible search capabilities.

Conclusion

Feature extraction and matching are the cornerstones of image retrieval systems, enabling efficient and accurate retrieval of visual data. From traditional handcrafted features to modern deep learning-based methods, these techniques have come a long way in addressing the challenges of large-scale visual search.

As technology continues to advance, we can expect even more robust and intelligent systems capable of handling complex real-world scenarios.

Manahil Samuel holds a Bachelor’s in Computer Science and has worked on artificial intelligence and computer vision. She skillfully combines her technical expertise with digital marketing strategies, utilizing AI-driven insights for precise and impactful content. Her work embodies a distinctive fusion of technology and storytelling, exemplifying her keen grasp of contemporary AI market standards.