Generative AI adoption has accelerated rapidly, with research showing that most organizations are now using generative AI in at least one business function. However, only a few have successfully scaled implementations beyond pilot phases. This gap reveals a critical truth: successful generative AI implementation requires strategic planning, technical expertise, and a thorough understanding of both opportunities and pitfalls.

This guide provides everything you need to bridge that gap. Whether you’re evaluating your first generative AI project or scaling existing initiatives, you’ll discover practical frameworks for building and implementing a generative AI model, addressing implementation challenges, and creating sustainable competitive advantages.

Key takeaways

- Strategic Implementation Beats Random Experimentation: Organizations that follow structured implementation frameworks are three times more likely to achieve production-scale success than those that use ad hoc approaches.

- Data Quality Determines Model Success: 80% of generative AI project success depends on data quality and preparation rather than model architecture selection.

- Start Small, Scale Smart: Begin with narrow, high-impact use cases demonstrating clear ROI before expanding to complex, enterprise-wide implementations.

- Build vs. Buy Requires Strategic Analysis: The choice among custom development, fine-tuning existing models, and using APIs should be based on specific use case requirements, not technology preferences.

- Continuous Monitoring Prevents Model Drift: Successful implementations include robust monitoring systems that track model performance, bias, and output quality over time to maintain reliability.

We’ll start by exploring the fundamental concepts, architectures, and capabilities that set generative AI apart from traditional machine learning approaches, providing the foundation for effective implementation.


What is generative AI?

Generative AI represents a fundamental departure from traditional artificial intelligence approaches. While conventional AI systems excel at classification, prediction, and pattern recognition, generative AI creates new content by learning the underlying patterns and structures of training data. For example, generative AI can create entirely new cat images that never existed before, instead of simply recognizing that an image contains a cat.

According to Statista Market Forecast, the generative AI market is projected to reach US$62.72bn in 2025, with an expected compound annual growth rate (CAGR 2025-2030) of 41.53%, resulting in a projected market volume of US$356.10bn by 2030. These projections reflect expectations of rapid adoption and the technology's transformative potential.

The key distinction lies in capability and purpose. Traditional AI focuses on understanding and categorizing existing information, while generative AI goes a step further by producing original outputs. This fundamental shift opens up possibilities for creative applications that were previously impossible with conventional AI systems.

This creative capability emerges from sophisticated neural network architectures that learn to model complex data distributions, enabling them to generate novel and contextually appropriate outputs.

Common types of generative AI

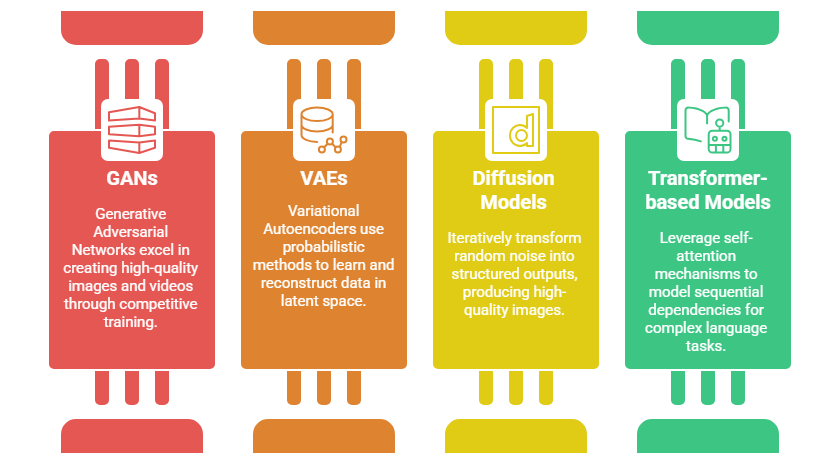

Understanding the four primary generative AI architectures helps you select the most appropriate technology for your specific use case and implementation requirements.

GANs (generative adversarial networks)

Two neural networks compete in an adversarial training process: a generator creates synthetic data while a discriminator tries to identify fake content. This competitive dynamic pushes both networks to improve; ideally, training continues until the discriminator can no longer reliably distinguish the generator's outputs from real data.

GANs excel at creating exceptionally high-quality images and videos, making them ideal for creative applications, photo editing, synthetic media generation, and style transfer. However, they can be difficult to train because of instability and mode collapse, where the generator produces only a narrow range of outputs.
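To make the adversarial objective concrete, here is a minimal sketch of the binary cross-entropy losses commonly used to train the two networks, assuming the discriminator outputs a probability that its input is real. The function names are illustrative, the network architectures are omitted, and the generator loss uses the widely used "non-saturating" variant rather than the original minimax form.

```python
import math

def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The discriminator is rewarded for D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator is rewarded for D(fake) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# A discriminator that cannot tell real from fake outputs 0.5 for every input.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln(2), about 1.386
print(generator_loss([0.5, 0.5]))                  # ln(2), about 0.693
```

When the discriminator wins easily (D(fake) close to 0), the non-saturating generator loss stays large and keeps providing a useful training signal, which is one reason it is preferred in practice.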

VAEs (variational autoencoders)

These models use probabilistic approaches to learn compressed data representations in latent space, essentially creating a mathematical map of essential features. Unlike GANs, VAEs focus on understanding the underlying data structure by encoding information into a lower-dimensional space and reconstructing it.

This architecture enables controlled generation by manipulating latent variables, providing interpretable generation processes with stable training characteristics. VAEs are particularly valuable for applications requiring precise attribute control, such as molecular design, facial feature manipulation, and data compression tasks.
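Two mechanics distinguish a VAE and can be sketched in a few lines: the reparameterization trick, which draws a latent sample as a deterministic function of the encoder's mean and log-variance plus independent noise, and the KL-divergence term that keeps the latent space close to a standard normal prior. This sketch assumes a diagonal Gaussian encoder (the most common setup) and omits the encoder and decoder networks; in a real framework, the point of the trick is that gradients can flow through `mu` and `logvar`.

```python
import math
import random

def reparameterize(mu: list[float], logvar: list[float], rng: random.Random) -> list[float]:
    """z = mu + sigma * eps with eps ~ N(0, 1); the randomness is isolated in eps."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu: list[float], logvar: list[float]) -> float:
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

def vae_loss(reconstruction_error: float, mu: list[float], logvar: list[float]) -> float:
    """Objective: reconstruct the input well while keeping the latent code near the prior."""
    return reconstruction_error + kl_to_standard_normal(mu, logvar)

rng = random.Random(0)
z = reparameterize(mu=[0.0, 1.0], logvar=[0.0, -2.0], rng=rng)  # one 2-D latent sample
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: this posterior already equals the prior
```

Varying a single coordinate of `z` and decoding the result is how the controlled, attribute-level generation described above is typically explored.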

Diffusion models

Diffusion models generate content through an iterative denoising process, gradually transforming random noise into structured output over many steps, much like developing a photograph in a darkroom. They work by learning to reverse a noise-adding procedure, which allows precise control over generation quality and attributes.

Diffusion models produce some of the highest-quality images available, with strong controllability and stable training characteristics. They have revolutionized text-to-image generation, artistic creation, detailed image editing, and architectural visualization, though they typically require more computational resources and longer generation times than other approaches.
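The noise-adding procedure that a diffusion model learns to reverse has a simple closed form. Below is a minimal sketch of the forward process used in DDPM-style models, assuming a linear noise schedule (the beta values are common illustrative defaults, not requirements): at step t, a clean value x0 is mixed with Gaussian noise as x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps. A trained network would learn to predict eps from x_t, which is what lets generation run the process in reverse.

```python
import math
import random

def linear_beta_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Variance of the noise added at each step, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]

def alpha_bars(betas: list[float]) -> list[float]:
    """Cumulative product of (1 - beta): how much of the original signal survives by step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def add_noise(x0: list[float], t: int, abar: list[float], rng: random.Random) -> tuple[list[float], list[float]]:
    """Sample x_t ~ q(x_t | x_0) in one shot; returns (x_t, eps), where eps is the training target."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal, noise = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [signal * x + noise * e for x, e in zip(x0, eps)], eps

betas = linear_beta_schedule(1000)
abar = alpha_bars(betas)
x_early, _ = add_noise([1.0, -1.0], 0, abar, random.Random(0))   # almost unchanged
x_late, _ = add_noise([1.0, -1.0], 999, abar, random.Random(0))  # almost pure noise
```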

Transformer-based models (e.g., GPT, LLaMA)

Transformers use self-attention mechanisms to model sequential dependencies, allowing the model to focus on the relevant parts of an input sequence when generating new content. Self-attention captures relationships between elements regardless of their distance in the sequence, which makes transformers well suited to complex language tasks that require understanding context.

These versatile architectures power large language models like GPT and LLaMA, enabling natural language processing, code generation, document analysis, and cross-modal understanding. Transformers can process long sequences (up to the limit of their context window) and maintain coherent generation over extended outputs, though they require substantial computational resources and extensive training data.
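The self-attention computation described above is compact enough to write out directly. This is a minimal single-head sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, in plain Python; it deliberately leaves out the learned projection matrices, masking, multiple heads, and batching that real transformer implementations add.

```python
import math

Matrix = list[list[float]]

def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """One row per token. Returns (outputs, attention_weights)."""
    d = len(k[0])
    outputs, weights = [], []
    for qi in q:
        # How strongly this token's query matches every key, scaled by sqrt(d)
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        w = softmax(scores)  # attention weights for this token, summing to 1
        weights.append(w)
        # Output = attention-weighted average of all value vectors, however far away they are
        outputs.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return outputs, weights

# Self-attention: queries, keys, and values all come from the same three-token sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(x, x, x)
```

Because every token's scores are computed against every other token in a single step, distance in the sequence plays no special role, which is the property the paragraph above highlights.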

Applications and use cases of generative AI

Understanding where generative AI creates the most value helps prioritize implementation efforts and maximize return on investment. Let’s explore the most impactful applications across industries:

1. Text generation

Text generation applications transform how organizations create written content, from marketing materials to technical documentation, enabling faster production and consistent quality across all communication channels.

Content creation and marketing

- Generate automated blog posts, product descriptions, and marketing copy that maintains brand voice while scaling content production.

- Create personalized email campaigns and social media content tailored to specific audience segments and customer preferences.

- Develop comprehensive technical documentation and user guides that explain complex processes in accessible language.

Business communications

- Generate meeting summaries and action item lists that capture key decisions and next steps for improved team coordination.

- Create customer service responses and chatbot conversations that provide consistent, helpful information across all touchpoints.

- Draft professional proposals and contracts with relevant legal language and specific project requirements.

2. Image and video generation

Image and video generation capabilities enable organizations to create high-quality visual content without traditional design resources, transforming creative workflows and significantly reducing production costs.

Creative and design applications

- Create brand assets and logo designs that align with the company’s visual identity and marketing requirements.

- Generate product visualizations and prototypes that help stakeholders understand concepts before physical development begins.

- Produce architectural renderings and concept art that communicate design ideas with photorealistic quality and detail.

Marketing and advertising

- Develop personalized visual content for campaigns that resonate with specific demographic groups and market segments.

- Create product photography alternatives that showcase items in various settings without expensive photo shoots.

- Generate video content for social media platforms that engages audiences with dynamic, eye-catching visuals.

Technical applications

- Generate synthetic training data for computer vision models when real-world data is limited or expensive to collect.

- Enhance medical imaging for research and training purposes while maintaining patient privacy and data security.

- Enhance satellite imagery for better analysis of geographic and environmental changes over time.

3. Code generation

Code generation tools accelerate software development by automating routine programming tasks. This enables developers to focus on complex problem-solving while maintaining code quality and consistency standards.

Development acceleration

- Provide automated code completion and suggestions that help developers write more efficient code with fewer errors.

- Generate comprehensive test cases and documentation that ensure code reliability and make maintenance easier.

- Offer bug detection and fixing assistance that identifies potential issues before they impact production systems.

Legacy system modernization

- Translate code between different programming languages while preserving functionality and improving performance characteristics.

- Assist with architecture migration by analyzing existing systems and suggesting modernization approaches.

- Generate documentation for existing codebases that lack proper commenting or technical specifications.

4. Healthcare & life sciences

Healthcare applications focus on accelerating research, improving patient care, and ensuring regulatory compliance while maintaining the highest standards for accuracy and safety.

Drug discovery and research

- Generate molecular structures for drug candidates that show promise for specific therapeutic targets and disease mechanisms.

- Predict protein folding patterns and analyze their implications for drug development and biological research.

- Optimize clinical trial designs that improve success rates while reducing costs and timeline requirements.

Patient care and diagnostics

- Create personalized treatment plans that consider individual patient characteristics, medical history, and current best practices.

- Enhance medical imaging analysis that helps healthcare providers identify conditions more accurately and quickly.

- Generate clinical notes and summaries that reduce administrative burden while maintaining comprehensive patient records.

Regulatory and compliance

- Automate regulatory document generation that meets strict industry standards and submission requirements.

- Create clinical trial reports that compile complex data into coherent, compliant documentation.

- Generate adverse event reports and analyses that support drug safety monitoring and regulatory oversight.

5. Gaming & metaverse

Gaming and virtual world applications enhance player experiences through dynamic content creation, personalized interactions, and immersive environments that adapt to individual preferences and behaviors.

Content creation

- Generate procedural worlds and levels that provide unique gaming experiences while reducing development time and costs.

- Create character designs and animations that bring diverse personalities and stories to life within game environments.

- Develop narrative content and dialogue that adapts to player choices and creates branching storylines.

Player experience enhancement

- Deliver personalized gaming experiences that adapt to individual player preferences, skill levels, and playing styles.

- Implement dynamic difficulty adjustment that maintains engagement by responding to player performance in real time.

- Enable real-time content adaptation that keeps games fresh and challenging for long-term player retention.

Virtual environments

- Build comprehensive metaverse worlds that support social interaction, commerce, and entertainment activities.

- Generate avatar customization options that allow users to express their identity and creativity in virtual spaces.

- Create virtual events and experiences that bring people together for entertainment, education, and business purposes.

6. Data augmentation

Data augmentation addresses the challenge of insufficient training data by generating synthetic examples that improve machine learning model performance and generalization capabilities.

Machine learning enhancement

- Generate synthetic training data that fills gaps in datasets and improves model performance across diverse scenarios.

- Simulate rare events and edge cases that are difficult to capture in real-world data collection efforts.

- Improve data diversity by creating variations that help models generalize to new, unseen situations.
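One widely used way to generate synthetic examples for an under-represented class is SMOTE-style interpolation: pick a real example, pick one of its nearest neighbors from the same class, and create a new point somewhere on the line between them. The sketch below is a simplified pure-Python version of that idea for numeric features. It is a classical, non-neural technique rather than a generative model, and libraries such as imbalanced-learn provide fuller implementations; the function name and parameters here are illustrative.

```python
import math
import random

def smote_like(samples: list[list[float]], n_new: int, k: int = 3, seed: int = 0) -> list[list[float]]:
    """Create n_new synthetic points by interpolating between same-class samples and their near neighbors."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to interpolate")
    rng = random.Random(seed)
    k = min(k, len(samples) - 1)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(samples)
        # k nearest neighbors of `base` (excluding itself), by Euclidean distance
        others = [s for s in samples if s is not base]
        neighbors = sorted(others, key=lambda s: math.dist(base, s))[:k]
        nb = rng.choice(neighbors)
        gap = rng.random()  # position along the segment base -> nb, in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, nb)])
    return synthetic

minority = [[1.0, 2.0], [1.5, 1.8], [1.2, 2.4], [0.9, 2.1]]
new_points = smote_like(minority, n_new=5)
```

Because each new point lies between two real ones, this adds variety without leaving the region the real data occupies; it cannot invent genuinely new edge cases, which is where generative models are more useful.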

Quality assurance

- Generate detailed test scenarios that cover edge cases and unusual conditions that might not occur naturally.

- Identify potential failure modes and system vulnerabilities through systematic scenario generation and testing.

- Create performance testing data that simulates various load conditions and user behavior patterns.

7. Finance

Financial applications focus on risk management, customer service enhancement, and regulatory compliance while maintaining the security and accuracy required in financial services.

Risk management and analysis

- Generate synthetic financial data for stress testing that helps institutions understand potential vulnerabilities without exposing real customer information.

- Create market scenario models that help financial professionals prepare for various economic conditions and market fluctuations.

- Enhance credit risk assessment by generating additional data points and scenarios for more comprehensive evaluation.

Customer experience

- Generate personalized financial advice that considers individual circumstances, goals, and risk tolerance levels.

- Create automated reports summarizing complex financial information in clear, actionable formats for clients.

- Synthesize investment research from multiple sources to provide a comprehensive analysis and recommendations.

Regulatory and compliance

- Automate compliance reporting that ensures accurate, timely submission of required regulatory documentation.

- Generate risk assessment documentation that demonstrates adherence to industry standards and regulatory requirements.

- Assist with regulatory filing preparation that meets complex submission requirements and deadlines.

8. Education

Educational applications personalize learning experiences and streamline administrative tasks, enabling educators to focus on teaching while providing students with customized learning paths.

Personalized learning

- Generate customized curriculum and lesson plans that adapt to individual student learning styles, pace, and interests.

- Create student assessments and feedback that provide meaningful evaluation while supporting continued learning and improvement.

- Optimize learning paths by analyzing student progress and suggesting appropriate next steps and resources.

Administrative efficiency

- Automate grading and feedback processes that provide consistent evaluation while reducing teacher workload and administrative burden.

- Generate student progress reports that communicate achievement and areas for improvement to students, parents, and administrators.

- Create administrative documentation that streamlines school operations and maintains accurate records.

9. Marketing & customer experience

Marketing applications enable hyper-personalization at scale while enhancing customer support capabilities, creating more engaging and responsive customer relationships across all touchpoints.

Personalization at scale

- Create dynamic content that adapts to user behavior, preferences, and interaction history for more relevant experiences.

- Generate personalized product recommendations and descriptions that increase engagement and conversion rates.

- Develop targeted campaign messaging that resonates with specific audience segments and drives desired actions.

Customer support enhancement

- Deploy intelligent chatbots and virtual assistants that provide accurate, helpful responses while maintaining conversational quality.

- Generate automated responses that address common customer inquiries quickly and consistently across all support channels.

- Create and maintain comprehensive knowledge bases that provide accurate, up-to-date information for customer self-service.

10. Legal & compliance

Legal applications streamline document creation, enhance research capabilities, and support compliance efforts while maintaining the accuracy and precision required in legal practice.

Document generation and analysis

- Assist with contract drafting and review by generating standard clauses and identifying potential issues or missing elements.

- Support legal research and case analysis by synthesizing relevant precedents, statutes, and legal commentary.

- Create compliance documentation that ensures adherence to regulatory requirements and industry standards.

Risk assessment

- Evaluate legal risks by analyzing contracts, business practices, and regulatory requirements for potential compliance issues.

- Generate compliance monitoring reports that track adherence to legal requirements and identify areas needing attention.

- Create policy documents that clearly communicate legal requirements and best practices to employees and stakeholders.


Business considerations before implementation

Establishing a solid business foundation before diving into technical implementation is crucial for generative AI implementation success. This phase determines whether your AI initiative drives meaningful value or becomes an expensive experiment.

Identifying use cases and ROI


Strategic use case identification framework

Start with an in-depth audit of your organization's pain points and opportunities. The most successful implementations target specific, measurable problems rather than broad, aspirational goals. Look for:

- Repetitive, time-consuming tasks that require creativity or content generation

- Processes with clear input-output relationships and measurable success criteria

- Activities where human expertise is scarce or expensive, but patterns exist in historical data

- Customer-facing functions with personalization opportunities

- Content creation bottlenecks that limit business growth

ROI calculation framework:

Understanding both cost savings and revenue opportunities helps justify implementation investments and set realistic performance expectations.

Direct cost savings:

- Labor cost reduction from automation

- Time-to-market acceleration benefits

- Error reduction and quality improvement savings

- Resource optimization and efficiency gains

Revenue generation opportunities:

- New product and service capabilities

- Enhanced customer personalization and engagement

- Market expansion through scalable content creation

- Competitive differentiation advantages
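These categories feed a simple calculation. The sketch below computes first-year ROI and a payback period from annual benefit and cost estimates. The formula (net benefit divided by total cost) is the standard ROI definition, while the field names and all input figures are hypothetical placeholders to replace with your own estimates.

```python
from dataclasses import dataclass

@dataclass
class RoiInputs:
    # Annual benefits (hypothetical categories mirroring the lists above)
    labor_savings: float
    error_reduction_savings: float
    new_revenue: float
    # Costs
    upfront_cost: float         # one-time build/integration cost
    annual_running_cost: float  # licenses, infrastructure, staff time

def first_year_roi(x: RoiInputs) -> float:
    """ROI = (total benefit - total cost) / total cost, over the first year."""
    benefit = x.labor_savings + x.error_reduction_savings + x.new_revenue
    cost = x.upfront_cost + x.annual_running_cost
    return (benefit - cost) / cost

def payback_months(x: RoiInputs) -> float:
    """Months until cumulative net monthly benefit covers the upfront cost."""
    monthly_net = (x.labor_savings + x.error_reduction_savings + x.new_revenue - x.annual_running_cost) / 12
    if monthly_net <= 0:
        return float("inf")  # never pays back at these estimates
    return x.upfront_cost / monthly_net

example = RoiInputs(labor_savings=120_000, error_reduction_savings=30_000, new_revenue=50_000,
                    upfront_cost=80_000, annual_running_cost=40_000)
print(f"{first_year_roi(example):.0%}")         # 67%
print(f"{payback_months(example):.1f} months")  # 6.0 months
```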


Feasibility assessment: data availability and domain complexity

Evaluating data readiness and implementation complexity helps set realistic timelines and resource requirements for successful generative AI deployment across different organizational contexts.

Data readiness evaluation

Generative AI quality directly correlates with data quality and availability. Before proceeding, conduct a thorough data audit covering volume requirements and quality standards.

Data volume requirements:

- Text generation: 10,000+ high-quality examples for fine-tuning

- Image generation: 1,000+ images for specific style transfer, 10,000+ for general generation

- Code generation: 1,000+ well-documented code examples

- Multimodal applications: Significantly higher requirements across all modalities

Data quality checklist:

- Accuracy: Information is correct and up-to-date

- Completeness: Minimal missing values or incomplete records

- Consistency: Standardized formats and conventions

- Relevance: Data represents the target domain and use case

- Diversity: Sufficient variety to prevent bias and ensure generalization

- Freshness: Recent data that reflects current patterns and trends
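Several checklist items can be measured automatically before any training begins. The sketch below scores completeness and freshness, plus a duplicate rate (a common companion check), over a batch of records. The field names, the 365-day freshness window, and the sample data are hypothetical; accuracy, relevance, and diversity usually still need domain review.

```python
from datetime import date, timedelta

def quality_report(records: list[dict], required: list[str], date_field: str,
                   today: date, max_age_days: int = 365) -> dict[str, float]:
    """Score a batch of records on completeness, duplication, and freshness (each 0.0-1.0)."""
    if not records:
        raise ValueError("no records to assess")
    n = len(records)
    # Completeness: every required field present and non-empty
    complete = sum(all(r.get(f) not in (None, "") for f in required) for r in records)
    # Duplicates: identical values across the required fields
    keys = [tuple(r.get(f) for f in required) for r in records]
    duplicates = n - len(set(keys))
    # Freshness: updated within the last max_age_days
    cutoff = today - timedelta(days=max_age_days)
    fresh = sum(1 for r in records if r.get(date_field) is not None and r[date_field] >= cutoff)
    return {"completeness": complete / n, "duplicate_rate": duplicates / n, "freshness": fresh / n}

records = [
    {"text": "Reset your password from the login page.", "label": "account", "updated": date(2025, 3, 1)},
    {"text": "Reset your password from the login page.", "label": "account", "updated": date(2025, 3, 1)},
    {"text": "", "label": "billing", "updated": date(2021, 6, 5)},
    {"text": "Invoices are emailed monthly.", "label": None, "updated": date(2024, 11, 20)},
]
report = quality_report(records, required=["text", "label"], date_field="updated", today=date(2025, 6, 1))
```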

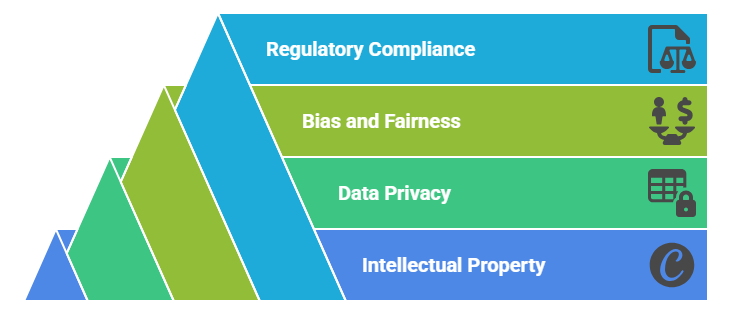

Legal, ethical, and compliance concerns

Addressing legal frameworks, ethical considerations, and compliance requirements is essential for responsible generative AI implementation that protects organizations from regulatory and reputational risks.

Intellectual property and copyright

Understanding IP rights, content ownership, and copyright implications prevents legal disputes and ensures compliance for both training data and generated outputs.

- Training data licensing: Verify licensing terms and obtain necessary permissions for all training data. Document data sources and maintain records to demonstrate compliance during audits.

- Generated content ownership: Establish clear policies about who owns AI-generated content in different contexts. Consider implications for client work, employee creations, and commercial use scenarios.

- Third-party IP protection: Implement content filtering and similarity checking to avoid copyright infringement. Establish legal review processes for generated outputs in sensitive applications.

- Attribution requirements: Research disclosure obligations for AI-generated content in your industry and jurisdiction. Implement clear labeling systems where transparency is required.
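For the similarity-checking step, a lightweight first-pass filter is word n-gram overlap between a generated output and a corpus of protected reference texts. The sketch below uses Jaccard similarity over word trigrams. The 0.5 threshold and the function names are assumptions to tune, and a match should route the output to legal review rather than serve as a legal determination.

```python
import re

def ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Lowercased word n-grams of a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a: set, b: set) -> float:
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def flag_for_review(generated: str, references: list[str], threshold: float = 0.5, n: int = 3) -> list[int]:
    """Indices of reference texts whose n-gram overlap with the output meets the threshold."""
    g = ngrams(generated, n)
    return [i for i, ref in enumerate(references) if jaccard(g, ngrams(ref, n)) >= threshold]

refs = [
    "The quick brown fox jumps over the lazy dog near the river bank.",
    "Quarterly revenue grew due to strong demand in the enterprise segment.",
]
print(flag_for_review("The quick brown fox jumps over the lazy dog near the bank.", refs))  # [0]
```

Exact n-gram overlap catches near-verbatim reproduction but misses paraphrase; embedding-based similarity is a common second stage when that matters.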

Data privacy and protection

Protecting personal data and ensuring privacy compliance throughout the AI lifecycle requires comprehensive policies covering collection, processing, storage, and deletion procedures.

- Data minimization and purpose limitation: Collect only necessary data for stated AI purposes and implement strict usage controls. Establish regular audits to prevent scope creep in data usage.

- Consent management and right to erasure: Obtain appropriate consent with clear withdrawal mechanisms and maintain detailed records. Implement robust deletion capabilities for individual data removal requests.

- Cross-border transfers and GDPR compliance: Ensure international data transfer compliance through appropriate safeguards and adequacy decisions. Maintain documentation for regulatory examinations.

- Access controls and encryption: Implement strict access controls, data encryption, and audit trails. Establish monitoring systems to track data usage and detect unauthorized access.

Bias and fairness

Preventing discriminatory outcomes and ensuring equitable AI performance requires proactive bias detection, diverse training data, and ongoing monitoring across different user groups.

- Diverse training data and representation: Actively seek diverse data sources and implement diversity quotas in training sets. Regularly audit data composition for gaps or overrepresentation issues.

- Bias testing and fairness metrics: Establish automated testing protocols to identify discriminatory output patterns. Implement quantitative fairness measures with acceptable performance thresholds.

- Human oversight and review processes: Maintain human approval workflows for sensitive applications and expert review requirements. Create escalation procedures for questionable outputs.

- Regular monitoring and assessment: Conduct ongoing evaluation of model performance across different demographics and use cases. Update training data and models based on bias detection results.
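Two widely used quantitative fairness measures are the demographic parity difference (the gap in favorable-outcome rates between groups) and the disparate impact ratio (the lowest group rate divided by the highest), the latter often compared against the "four-fifths" rule of thumb from US employment-selection guidance. The sketch below computes both from logged outcomes; the group labels, data, and 0.8 threshold are illustrative, and the appropriate metric and threshold depend on your application and jurisdiction.

```python
from collections import defaultdict

def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """outcomes: (group, got_favorable_outcome) pairs, e.g. from logged model decisions."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, favorable in outcomes:
        totals[group] += 1
        positives[group] += int(favorable)
    return {g: positives[g] / totals[g] for g in totals}

def fairness_summary(outcomes: list[tuple[str, bool]], min_ratio: float = 0.8) -> dict:
    rates = selection_rates(outcomes)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "disparate_impact_ratio": ratio,
        "passes_threshold": ratio >= min_ratio,
    }

log = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 42 + [("B", False)] * 58
summary = fairness_summary(log)
```

In this hypothetical log, group B's rate (42%) is 0.7 times group A's (60%), so the check fails and the case would go to the human review and escalation processes described above.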

Regulatory compliance

Meeting industry-specific regulations requires understanding sector requirements, implementing appropriate controls, and maintaining documentation for audits and regulatory examinations.

- Healthcare compliance (HIPAA, FDA): Implement patient data protection measures, including encryption and access logging. Meet FDA requirements for medical applications through clinical validation procedures.

- Financial services (SOX, anti-discrimination): Maintain financial reporting compliance and implement fair lending practices. Establish risk management frameworks and regulatory reporting procedures.

- Education and government (FERPA, security clearance): Follow student data protection requirements, including parental consent procedures. Meet government security standards and procurement regulations.

- Documentation and audit trails: Maintain records of AI development, deployment, and monitoring, and conduct regular compliance assessments with defined remediation procedures.
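Audit trails are more useful when tampering is detectable. A common pattern is a hash-chained log, in which each entry stores the SHA-256 hash of the previous entry, so editing any entry, or removing one before the end of the chain, breaks verification. This is a minimal in-memory sketch using only Python's standard library; the event fields are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(entry: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically
    payload = json.dumps({"event": entry["event"], "prev_hash": entry["prev_hash"]}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_event(log: list[dict], event: dict) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev_hash": prev}
    entry["hash"] = _entry_hash(entry)
    log.append(entry)

def verify_chain(log: list[dict]) -> bool:
    prev = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev or entry["hash"] != _entry_hash(entry):
            return False
        prev = entry["hash"]
    return True

audit_log: list[dict] = []
append_event(audit_log, {"action": "model_deployed", "model": "summarizer-v2", "by": "alice"})
append_event(audit_log, {"action": "threshold_changed", "field": "toxicity", "value": 0.3, "by": "bob"})
print(verify_chain(audit_log))           # True
audit_log[0]["event"]["by"] = "mallory"  # tamper with history
print(verify_chain(audit_log))           # False
```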

Build vs. buy decision framework

| Aspect | Open-Source Models | Commercial APIs | Custom Development |
| --- | --- | --- | --- |
| Initial Cost | Low ($10K-$100K) | Medium ($5K-$50K monthly) | High ($20K-$500K+) |
| Timeline | Medium (3-6 months) | Fast (2-8 weeks) | Slow (6-18 months) |
| Customization | High (full control) | Low (limited options) | Very High (complete control) |
| Technical Expertise | High (ML + DevOps required) | Low (integration only) | Very High (full AI/ML team) |
| Ongoing Costs | Medium (infrastructure + staff) | Variable (usage-based pricing) | Low (maintenance only) |
| Control & Ownership | High (complete code ownership) | Low (vendor-dependent) | Very High (total ownership) |
| Support | Community (variable quality) | Professional (dedicated teams) | Internal (self-managed) |
| Scalability | Manual scaling required | Automatic vendor scaling | Custom scaling solutions |
| Security | Self-managed security | Vendor-managed security | Complete security control |
| Updates | Community-driven updates | Automatic vendor updates | Manual update management |
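Because the table mixes one-time and recurring costs, a quick break-even calculation can make the comparison concrete. The sketch below finds the first month in which an option with a higher upfront cost but lower monthly cost (for example, self-hosting an open-source model) becomes cheaper in cumulative terms than a usage-priced API. Every figure in the example is a hypothetical placeholder, not a quote or benchmark.

```python
import math

def break_even_month(upfront_a: float, monthly_a: float, upfront_b: float, monthly_b: float) -> float:
    """First month m at which option A's cumulative cost <= option B's.

    Cumulative cost after m months = upfront + m * monthly.
    Returns 0 if A is never more expensive, math.inf if A never catches up.
    """
    if upfront_a <= upfront_b and monthly_a <= monthly_b:
        return 0
    saving_per_month = monthly_b - monthly_a
    if saving_per_month <= 0:
        return math.inf
    return math.ceil((upfront_a - upfront_b) / saving_per_month)

# Hypothetical: self-hosted (60K upfront, 8K/month) vs. API (5K integration, 15K/month usage)
print(break_even_month(60_000, 8_000, 5_000, 15_000))  # 8
```

In this made-up scenario, the self-hosted option costs 116K vs. 110K after seven months but 124K vs. 125K after eight, so it only pays off if the project is expected to run well beyond that horizon.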

From MVP to enterprise-ready AI—Folio3 supports you at every implementation stage. See Our AI Development Lifecycle

Generative AI implementation roadmap

A systematic approach to generative AI implementation increases the likelihood of success. This nine-phase roadmap provides a structured framework for transforming AI concepts into production-ready solutions.

1. Problem definition

The foundation of successful implementation lies in a crystal-clear problem definition. Vague objectives lead to scope creep, missed deadlines, and solutions that don’t address actual business needs. Start by defining the specific business challenge and establishing quantifiable success metrics.

- Define the specific business challenge you’re solving with a clear articulation of the current situation and desired outcomes.

- Establish quantifiable success metrics that can be measured throughout the project lifecycle.

- Identify target users and usage context while considering technical, budget, and timeline constraints.

- Create detailed problem statements with measurable goals and specific performance targets.

- Ensure stakeholder alignment through interviews, feasibility assessments, and regular checkpoints.

2. Data strategy

Your data strategy determines model quality, training efficiency, and long-term maintenance costs. Analyze data requirements and sources, including internal customer interactions and external datasets, while establishing comprehensive quality frameworks.

- Analyze data requirements from internal sources, like customer interactions, and external sources, such as public datasets.

- Establish a thorough data quality framework addressing completeness, accuracy, and consistency standards.

- Implement privacy and compliance measures, including data anonymization, consent management, and retention policies.

- Design a data pipeline architecture that handles the flow from ingestion through cleaning, validation, storage, and processing.

- Consider synthetic data generation for scenarios with limited real data or privacy concerns.
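The pipeline stages above can be sketched as a chain of small generator functions, which keeps each stage testable on its own. This minimal example assumes text records arriving as dictionaries. The field names, the minimum-length rule, and the regular expressions that mask email addresses and phone-like numbers are all illustrative; the masking is a simple anonymization step that will miss many kinds of personal data.

```python
import re
from collections.abc import Iterable, Iterator

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def ingest(raw: Iterable[dict]) -> Iterator[dict]:
    for record in raw:
        yield {"id": record.get("id"), "text": record.get("text") or ""}

def clean(records: Iterable[dict]) -> Iterator[dict]:
    for r in records:
        text = " ".join(r["text"].split())  # normalize whitespace
        text = EMAIL.sub("[EMAIL]", text)   # mask obvious personal data
        text = PHONE.sub("[PHONE]", text)
        yield {**r, "text": text}

def validate(records: Iterable[dict], min_chars: int = 10) -> Iterator[dict]:
    for r in records:
        if r["id"] is not None and len(r["text"]) >= min_chars:
            yield r  # invalid records are dropped here; log them in practice

def run_pipeline(raw: Iterable[dict]) -> list[dict]:
    # Storage and downstream processing would consume this validated output
    return list(validate(clean(ingest(raw))))

raw = [
    {"id": 1, "text": "Contact   me at jane.doe@example.com or +1 (555) 123-4567 please."},
    {"id": 2, "text": "hi"},
    {"id": None, "text": "Record without an identifier should be rejected."},
]
processed = run_pipeline(raw)
```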

3. Model selection

Choosing the right model architecture affects performance, costs, and maintenance requirements. When selecting between different architectural approaches and implementation strategies, consider performance requirements, technical constraints, and use case specificity.

- Consider performance requirements, including quality expectations, speed needs, and scale demands.

- Evaluate technical constraints like computational resources, latency requirements, and maintenance capabilities.

- Select architectures based on use case: transformers for text, diffusion models for images, specialized models for code.

- Choose between pre-trained APIs for standard needs, fine-tuning for domain-specific requirements, or custom development.

- When making architecture decisions, factor in timeline, budget, technical expertise, and competitive advantage.
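One way to make these trade-offs explicit is a weighted scoring matrix: score each option against each criterion, weight the criteria by importance, and compare totals. The sketch below is a generic decision-matrix helper; the criteria, weights, and 1-5 scores are placeholders for your own assessment, not recommendations for any particular model or approach.

```python
def rank_options(weights: dict[str, float], scores: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return options sorted by weighted score (weights are normalized to sum to 1)."""
    total_weight = sum(weights.values())
    ranked = []
    for option, option_scores in scores.items():
        missing = set(weights) - set(option_scores)
        if missing:
            raise ValueError(f"{option} is missing scores for {sorted(missing)}")
        value = sum(weights[c] * option_scores[c] for c in weights) / total_weight
        ranked.append((option, round(value, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)

weights = {"quality": 0.3, "latency": 0.2, "cost": 0.2, "customization": 0.15, "time_to_market": 0.15}
scores = {  # 1 = poor, 5 = excellent (hypothetical assessment)
    "commercial_api": {"quality": 5, "latency": 3, "cost": 3, "customization": 2, "time_to_market": 5},
    "fine_tuned_open_model": {"quality": 4, "latency": 4, "cost": 4, "customization": 4, "time_to_market": 3},
    "custom_model": {"quality": 4, "latency": 4, "cost": 2, "customization": 5, "time_to_market": 1},
}
result = rank_options(weights, scores)
print(result)
```

The value of the exercise lies less in the final number than in forcing stakeholders to agree on the weights; small weight changes that flip the ranking are a sign the decision needs more discussion.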

4. Infrastructure planning

Plan computational requirements for both training and inference needs. Training requires GPU clusters and high-throughput storage, while inference needs optimized serving systems and load balancing. Choose deployment strategies based on security and scalability requirements.

- Plan computational requirements for training (GPU clusters, storage) and inference (serving systems, load balancing).

- Choose between cloud for scalability, on-premises for security, or hybrid approaches balancing both needs.

- Implement cost optimization through spot instances, auto-scaling, model optimization, and effective caching strategies.

- Consider disaster recovery, backup strategies, and edge deployment for low-latency applications.

- Evaluate managed services versus self-hosted solutions based on privacy concerns and compliance requirements.
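Caching is often the cheapest of these inference optimizations: identical requests (after light normalization) can be served from memory instead of calling the model again. The sketch below wraps a model call in a small least-recently-used (LRU) cache. `fake_model` is a hypothetical stand-in for your real inference client, and the normalization rule and capacity are assumptions; exact-match caching only helps when prompts repeat and reusing an earlier response is acceptable.

```python
from collections import OrderedDict
from typing import Callable

class PromptCache:
    """LRU cache for model responses, keyed by normalized prompt text."""

    def __init__(self, generate: Callable[[str], str], capacity: int = 1024):
        self.generate = generate
        self.capacity = capacity
        self._store: OrderedDict[str, str] = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        return " ".join(prompt.lower().split())  # case- and whitespace-insensitive match

    def __call__(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self.generate(prompt)
        self._store[key] = response
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response

calls: list[str] = []

def fake_model(prompt: str) -> str:  # hypothetical stand-in for a real inference call
    calls.append(prompt)
    return f"answer to: {prompt.strip()}"

cached = PromptCache(fake_model, capacity=2)
cached("What is our refund policy?")
cached("what is our   refund policy?")  # served from cache
```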

5. Team setup

Assemble a cross-functional team including AI/ML engineers, data scientists, data engineers, product managers, DevOps engineers, and domain experts. Consider team structure options that balance standardization with implementation speed and domain specialization.

- Assemble a cross-functional team with AI/ML engineers, data scientists, data engineers, and product managers.

- Include DevOps engineers for infrastructure management and domain experts for quality assessment.

- Choose team structure: centralized for standardization, embedded for speed, or hybrid for a balanced approach.

- Define clear roles, responsibilities, communication protocols, and knowledge sharing mechanisms.

- Ensure a proper mix of technical skills, domain expertise, and project management capabilities.

6. MVP development

Focus on validating core assumptions with minimal resource investment while building a foundation for future scaling. Define the scope to include essential features, use agile methodology, and establish clear success criteria.

- Define MVP scope, including essential features, limited use cases, and basic integration requirements.

- Use agile development with 2-week sprints, daily standups, stakeholder feedback, and continuous improvement.

- Establish quality gates, including code review standards, performance benchmarks, and security checkpoints.

- Define success criteria covering functional requirements, performance thresholds, and user satisfaction.

- Focus on demonstrating value and technical feasibility for future scaling potential.

7. Testing & validation

Implement comprehensive testing covering functional, performance, and quality aspects. Conduct bias testing, use A/B testing frameworks, and establish proper evaluation metrics to ensure reliable and fair AI system performance.

- Implement functional testing, including unit, integration, end-to-end, and regression testing approaches.

- Conduct performance testing for load capacity, stress limits, latency measurement, and throughput assessment.

- Assess output quality through relevance, coherence, factual accuracy, and style consistency metrics.

- Implement bias and fairness testing across user groups with quantitative measurement and adversarial testing.

- Use A/B testing frameworks to compare existing processes with AI-enhanced versions, and check that differences are statistically significant.
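
To make "statistically significant" concrete, here is a minimal two-proportion z-test, assuming the A/B metric is a binary outcome such as "task completed" per session. It is a sketch: real experiments should fix the sample size in advance and would typically rely on an established statistics library.

```python
import math
from typing import Tuple


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference in success rates between variant A and variant B."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all successes or all failures in both arms: no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, computed via the error function.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value
```

For example, 120/1000 successes for the existing process versus 150/1000 for the AI-enhanced version gives z of roughly 1.96 and a p-value close to 0.05, a borderline result that argues for collecting more data before declaring a winner.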

8. Deployment

Execute a phased rollout, starting with limited beta testing and gradually expanding to full production. Use technical deployment strategies to ensure zero-downtime switching and comprehensive monitoring across all system components.

- Execute a phased rollout: a beta with 10% of users, an expansion to 50%, and finally full production deployment.

- Use technical strategies like blue-green deployment for zero-downtime switching and easy rollback capabilities.

- Implement gradual traffic shifting with canary releases and real-time monitoring of key performance metrics.

- Establish comprehensive infrastructure monitoring covering system health, application performance, and model quality.

- Include user experience monitoring through satisfaction scores and detailed usage pattern analysis.
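
The 10% to 50% to 100% schedule above needs a stable way to decide which users see the AI variant. One common approach, sketched here as an assumption rather than a prescribed method, is to hash each user ID into one of 100 buckets. Because the bucket never changes, everyone in the 10% beta stays enrolled when the rollout widens to 50%. The `salt` value is hypothetical; changing it reshuffles assignments.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "genai-rollout") -> int:
    """Map a user ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_ai_variant(user_id: str, rollout_percent: int) -> bool:
    """True if this user falls inside the current rollout percentage."""
    return rollout_bucket(user_id) < rollout_percent
```

Raising `rollout_percent` step by step gives canary-style traffic shifting at the user level, and setting it back to 0 acts as an immediate rollback switch.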

9. Monitoring & maintenance

Establish continuous improvement through real-time performance monitoring and model quality assessment. Implement regular maintenance processes and create integrated feedback loops for ongoing optimization and stakeholder communication.

- Establish real-time monitoring of response times, error rates, resource utilization, and user engagement metrics.

- Monitor model quality for output drift, data drift, concept drift, and ongoing bias assessment.

- Implement regular maintenance, including retraining schedules, data refresh, and architecture updates.

- Create feedback loops through user input collection, performance analysis, and improvement prioritization.

- Handle operational maintenance through security updates, infrastructure scaling, and team training programs.
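
Data drift monitoring needs a number to alert on. The Population Stability Index (PSI) is one widely used option; the sketch below compares a baseline sample of a numeric input feature (for example, prompt length) against a live sample. The bin count and the `eps` floor for empty bins are assumptions, and the thresholds often quoted as a rule of thumb (below 0.1 stable, above 0.25 significant shift) should be tuned to your own data.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a baseline sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def histogram(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bins come from the baseline range; live values outside it go to the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

PSI covers input (data) drift only; output drift and concept drift still need quality metrics computed on model outputs and labeled feedback.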

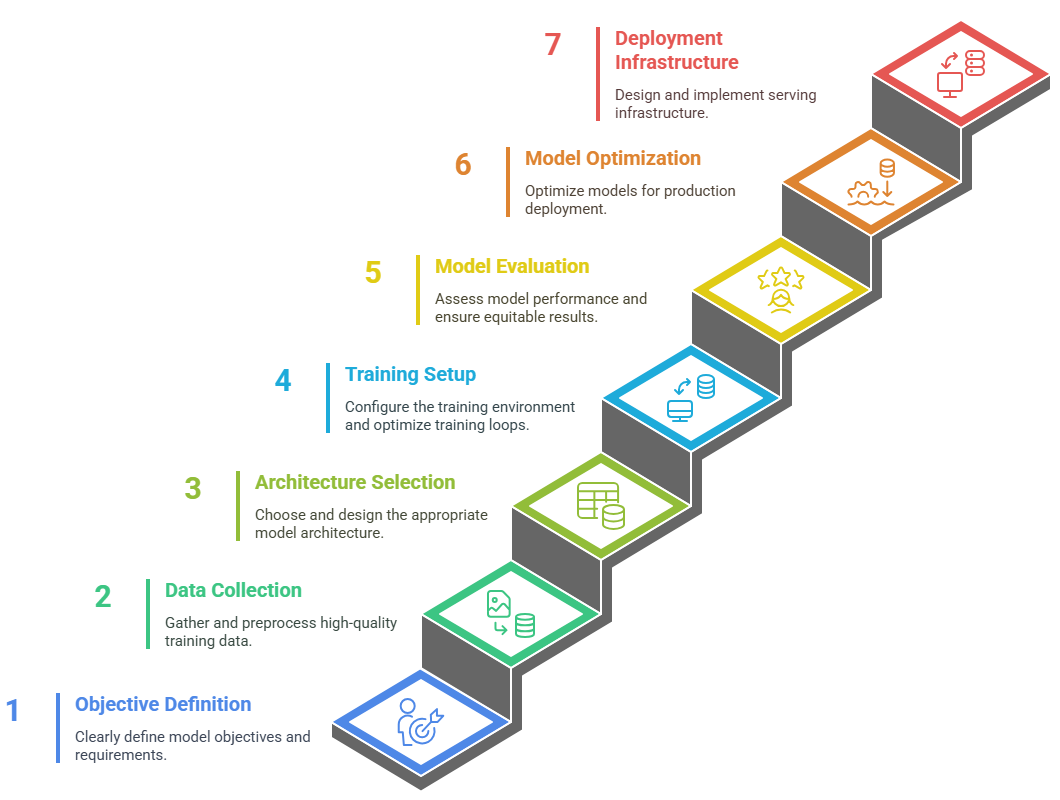

How to build a generative AI model

Building a generative AI model requires systematic technical execution from concept to production deployment, covering data preparation, architecture selection, training optimization, evaluation protocols, and serving infrastructure implementation.

Step 1: Objective definition and requirements analysis

Begin by clearly defining your model’s technical objectives and performance requirements. Specify content type, quality standards, latency constraints, and expected throughput. Establish measurable success metrics and document technical specifications that align with business requirements.

- Define technical objectives, including content type (text, images, code), quality standards, and performance requirements.

- Establish measurable success metrics such as BLEU scores for text or FID scores for image generation.

- Document input/output specifications, including data formats, size limitations, and conditioning parameters.

- Define technical constraints like maximum model size, inference time requirements, and computational budgets.

- Create an extensive specification document outlining architecture preferences and deployment targets.
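
To show what a measurable text metric involves, here is a simplified sentence-level BLEU: clipped n-gram precisions up to 4-grams, combined by a geometric mean and multiplied by a brevity penalty. It assumes a single reference, pre-tokenized input, uniform weights, and no smoothing. Reported scores should come from an established implementation such as sacreBLEU, since tokenization and smoothing choices change the numbers.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: List[str], reference: List[str], max_n: int = 4) -> float:
    """Single-reference BLEU with uniform weights and no smoothing."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by how often it appears in the reference.
        overlap = sum(min(count, ref[g]) for g, count in cand.items())
        if total == 0 or overlap == 0:
            return 0.0  # unsmoothed BLEU is zero when any n-gram precision is zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(sum(log_precisions) / max_n)
```

For instance, the candidate `"the cat sat on the mat today"` scored against the reference `"the cat sat on the mat"` keeps a brevity penalty of 1 but loses some precision at every n-gram order, landing at about 0.81.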

Step 2: Data collection and preprocessing

Gather high-quality training data representing your target domain and use cases. Implement comprehensive preprocessing pipelines that consistently clean, normalize, and structure data while ensuring quality validation and proper data management practices.

- Collect sufficient training data: as a rough guide, 10,000+ examples for text generation and 1,000+ for specific image styles.

- Implement preprocessing pipelines including duplicate removal, missing value handling, and format standardization.

- Apply domain-specific transformations: tokenization for text; resizing and normalization for images.

- Establish data validation procedures, ensuring quality and consistency throughout the pipeline.

- Create proper train/validation/test splits with data versioning and documentation practices.
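
A minimal text preprocessing pipeline covering the steps above might look like the following sketch: Unicode and whitespace standardization, dropping missing or empty values, case-insensitive exact deduplication, and a reproducible train/validation/test split. Assigning splits by hashing each record's content is one assumed approach among several (it keeps assignments stable as the dataset grows), and the 80/10/10 proportions are illustrative. Near-duplicate detection and data versioning are out of scope here.

```python
import hashlib
import re
import unicodedata
from typing import Dict, List, Optional


def normalize(text: Optional[str]) -> Optional[str]:
    """Standardize Unicode form and whitespace; return None for missing or empty values."""
    if text is None:
        return None
    text = unicodedata.normalize("NFC", text)
    text = re.sub(r"\s+", " ", text).strip()
    return text or None


def preprocess(records: List[Optional[str]]) -> List[str]:
    """Drop missing values and exact duplicates (after normalization), keeping the first occurrence."""
    seen, cleaned = set(), []
    for raw in records:
        text = normalize(raw)
        if text is None:
            continue
        key = text.lower()  # treat case-only variants as duplicates
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


def split_bucket(text: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Assign a record to a split by hashing its content, so reruns give the same split."""
    bucket = int(hashlib.sha256(text.encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


def make_splits(records: List[Optional[str]]) -> Dict[str, List[str]]:
    splits: Dict[str, List[str]] = {"train": [], "validation": [], "test": []}
    for text in preprocess(records):
        splits[split_bucket(text)].append(text)
    return splits
```

Deduplicating before splitting matters: if the same example lands in both train and test, evaluation scores are inflated.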

Step 3: Architecture selection and design

Choose an appropriate model architecture based on specific requirements and constraints. Design architecture considering computational limits, target performance, and infrastructure availability while implementing modular code for experimentation and reproducibility.

- Select an architecture based on the use case: transformer models for text, and diffusion models or GANs for images.

- Design architecture considering computational constraints, target performance, and available infrastructure.

- Determine optimal configurations, including layer setup, attention mechanisms, and embedding dimensions.

- Consider transfer learning opportunities from pre-trained models to accelerate development.

- Implement modular code using PyTorch or TensorFlow with proper initialization and optimization strategies.
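
The attention mechanism at the heart of transformer models is compact enough to write out directly. The pure-Python sketch below computes single-head scaled dot-product attention, softmax(QKᵀ/√d_k)V, without masking or batching, purely to show the arithmetic. In practice you would use framework implementations such as PyTorch's `torch.nn.functional.scaled_dot_product_attention`.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for a single head, without masking."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        # Similarity of this query to every key, scaled to keep softmax inputs well-conditioned.
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out
```

Embedding dimension and number of heads, mentioned above as configuration choices, correspond to the width of these vectors and to how many independent copies of this computation run in parallel.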

Step 4: Training setup and optimization

Configure the training environment with appropriate hardware and software dependencies. Design efficient training loops with proper loss functions, optimization algorithms, and monitoring systems while implementing techniques to prevent overfitting and ensure stability.

- Configure the training environment with hardware setup, dependencies, and distributed computing capabilities.

- Implement efficient data loaders handling batching, shuffling, and memory management requirements.

- Design training loops with appropriate loss functions, optimizers, and learning rate schedules.

- Implement regularization techniques such as dropout and weight decay, and use gradient clipping for training stability.

- Monitor training metrics closely, use early stopping to halt when validation performance stalls, and apply mixed-precision training to reduce memory use and speed up training.
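
Three of the training controls above can be sketched framework-free: a learning rate schedule with linear warmup followed by cosine decay (one common choice, assumed here), gradient clipping by global norm, and early stopping on validation loss. Frameworks ship equivalents, for example PyTorch's `torch.optim.lr_scheduler` module and the Keras `EarlyStopping` callback, so treat this as an illustration of the logic rather than something to copy into a training loop.

```python
import math
from typing import List


def warmup_cosine_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int,
                     min_lr: float = 0.0) -> float:
    """Linear warmup to base_lr, then cosine decay down to min_lr at total_steps."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = min((step - warmup_steps) / max(1, total_steps - warmup_steps), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def clip_by_global_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale gradients so their combined L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


class EarlyStopping:
    """Signal a stop when validation loss fails to improve by min_delta for `patience` evaluations."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_evals = 0

    def step(self, val_loss: float) -> bool:
        """Record a validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience
```

Warmup avoids large, destabilizing updates while optimizer statistics are still noisy, and clipping caps the damage from an occasional outlier batch.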

Step 5: Model evaluation and validation

Establish evaluation protocols assessing both quantitative performance and qualitative characteristics. Create diverse test datasets covering edge cases and implement bias testing to ensure equitable performance across different groups.

- Implement automated metrics appropriate to the domain, such as perplexity for language models and FID scores for images.

- Design human evaluation frameworks capturing subjective quality aspects like coherence and relevance.

- Create diverse test datasets covering edge cases, input variations, and potential failure modes.

- Implement bias and fairness testing, ensuring equitable performance across demographic groups.

- Conduct ablation studies and baseline comparisons to put model performance results in context.
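
Perplexity is the exponential of the average negative log-likelihood the model assigns to each actual token in a held-out text; lower is better. The sketch below assumes you already have per-token natural-log probabilities from your model (how to obtain them depends on the framework). It also compares perplexity across groups of test inputs, which is one simple probe for uneven performance, not a complete fairness audit. The group labels are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity = exp(-mean natural-log probability of each observed token)."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


def perplexity_gap(per_group: Dict[str, Sequence[float]]) -> Tuple[Dict[str, float], float]:
    """Perplexity per group of test inputs, plus the spread between best and worst group."""
    ppl = {group: perplexity(lps) for group, lps in per_group.items()}
    return ppl, max(ppl.values()) - min(ppl.values())
```

A model that assigns probability 0.25 to every correct token has perplexity 4, roughly as uncertain as choosing uniformly among four options at each step.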

Step 6: Model optimization and fine-tuning

Optimize trained models for production deployment through compression techniques while maintaining acceptable performance levels. Implement fine-tuning strategies for domain-specific improvements and conduct iterative optimization cycles based on evaluation results.

- Optimize models through quantization, pruning, or knowledge distillation to reduce computational requirements.

- Implement model compression strategies balancing size reduction with quality preservation.

- Fine-tune on domain-specific data using parameter-efficient techniques like LoRA or adapters.

- Monitor fine-tuning progress to prevent catastrophic forgetting while improving specialized performance.

- Conduct iterative optimization combining hyperparameter tuning, architecture adjustments, and training-procedure refinements.
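
Quantization is the easiest of these compression techniques to show in a few lines. The sketch below applies symmetric per-tensor int8 quantization to a flat list of weights: one scale maps the largest absolute weight to 127, and every weight is rounded to the nearest step. Storing int8 instead of float32 cuts weight storage roughly fourfold, at the cost of a rounding error of at most half a step per weight. Production toolchains add per-channel scales, calibration, and quantized kernels, none of which are shown here.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and their scale."""
    return [v * scale for v in q]
```

Measuring the accuracy drop on your evaluation set after quantizing is what tells you whether the size reduction is acceptable.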

Step 7: Deployment infrastructure and serving

Design and implement serving infrastructure that can handle expected traffic loads while meeting latency and availability requirements. Package models using containerization and establish comprehensive monitoring systems for production deployment.

- Design serving infrastructure that handles expected traffic loads while meeting latency and availability requirements.

- Choose a deployment approach: cloud-based, on-premises, or hybrid, based on security and performance needs.

- Package models using containerization technologies like Docker for consistent deployment across environments.

- Implement model serving frameworks such as TensorFlow Serving, TorchServe, or custom REST APIs.

- Establish monitoring systems tracking inference performance, resource utilization, and user experience indicators.
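
For the "custom REST API" option, a minimal sketch using only Python's standard library is shown below. It exposes a `/generate` endpoint and a `/health` check that load balancers and container orchestrators can probe. The `generate` function is a hypothetical placeholder for a real model call, and the sketch deliberately omits authentication, request batching, timeouts, and rate limiting, which dedicated servers such as TensorFlow Serving or TorchServe, or a production web framework, would handle.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return prompt[::-1]


class GenerateHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Liveness endpoint for health checks.
        if self.path == "/health":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/generate":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length) or b"{}")
            prompt = payload["prompt"]
        except (ValueError, KeyError, TypeError):
            self._send_json(400, {"error": "expected a JSON body with a 'prompt' field"})
            return
        self._send_json(200, {"output": generate(prompt)})

    def _send_json(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(host: str = "127.0.0.1", port: int = 8000) -> ThreadingHTTPServer:
    return ThreadingHTTPServer((host, port), GenerateHandler)
```

Calling `make_server().serve_forever()` starts the service; packaged in a Docker image, the same process runs identically across environments, and the default per-request log lines can feed the monitoring described above.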

Challenges in generative AI implementation

Generative AI implementation faces significant technical, ethical, and operational challenges that organizations must address proactively to ensure successful deployment and sustainable operations.

- High computational cost: Training and running generative AI models require substantial GPU resources, often costing thousands of dollars monthly for enterprise applications. Organizations must carefully balance model complexity with budget constraints while planning for scaling costs as usage grows.

- Ethical concerns (bias, misinformation): AI models can perpetuate biases present in training data and generate misleading or false information that appears credible. Companies must implement robust bias detection systems and content validation processes to prevent discriminatory outputs and misinformation spread.

- Data privacy and IP risks: Training models on proprietary or personal data creates potential privacy violations and intellectual property disputes. Organizations face legal liability risks and must implement strict data governance, anonymization techniques, and compliance measures throughout the development lifecycle.

- Controlling model output: Generative models can produce unpredictable, inappropriate, or off-brand content that damages reputation or violates policies. Implementing effective content filtering, output validation, and quality control mechanisms remains technically challenging while maintaining creative flexibility.

- Model interpretability and transparency: Understanding why generative models produce specific outputs is extremely difficult, creating accountability and debugging challenges. This “black box” nature complicates quality assurance, bias detection, and regulatory compliance in industries requiring explainable AI decisions.

Best practices and recommendations

Following proven implementation strategies improves success rates while minimizing risks and costs associated with generative AI deployment across organizational contexts.

- Start with narrow use cases: Target specific, well-defined problems rather than broad applications to reduce complexity and demonstrate clear value. Focused initial projects enable faster learning, easier success measurement, and a foundation for gradually expanding AI capabilities.

- Leverage open-source and pre-trained models: Utilize existing models and frameworks to accelerate development while reducing costs and technical risks. Pre-trained models provide proven performance baselines and community support, enabling faster time-to-market than building custom solutions from scratch.

- Implement safety checks and human-in-the-loop processes: Establish human oversight and automated safety mechanisms to review AI outputs before publication or decision-making. Combining human judgment with automated filters ensures quality control while building user trust and preventing potential reputational damage.

- Stay compliant with emerging regulations: Monitor evolving AI governance requirements and implement compliance frameworks early in development rather than retrofitting later. Proactive regulatory alignment reduces legal risks and positions organizations advantageously as standards mature and enforcement increases globally.
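
Combining automated filters with human review can be as simple as a routing decision made before anything is published. The sketch below blocks outputs that match policy patterns and sends low-confidence outputs to a human reviewer. The patterns, the 0.8 threshold, and the `confidence` score (which might come from a separate quality or moderation classifier) are all hypothetical; real deployments would rely on maintained moderation tooling and policies reviewed by legal and brand teams.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical policy patterns, for illustration only.
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),         # resembles a US Social Security number
    re.compile(r"\bguaranteed returns?\b", re.I),  # example of a prohibited financial claim
]


@dataclass
class Decision:
    action: str  # "publish", "human_review", or "block"
    reasons: List[str] = field(default_factory=list)


def route_output(text: str, confidence: float, review_threshold: float = 0.8) -> Decision:
    """Run automated checks first; anything uncertain goes to a human reviewer."""
    hits = [p.pattern for p in BLOCKED_PATTERNS if p.search(text)]
    if hits:
        return Decision("block", [f"matched policy pattern {h}" for h in hits])
    if confidence < review_threshold:
        return Decision("human_review", [f"confidence {confidence:.2f} below {review_threshold}"])
    return Decision("publish")
```

Whether a policy match should block outright or also go to review is a design choice; routing it to review trades throughput for fewer false positives reaching users as refusals.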

How can Folio3 AI help in generative AI solutions?

Folio3 AI provides end-to-end generative AI development services, helping organizations implement custom solutions that drive innovation, enhance productivity, and create competitive advantages across industries.

- Custom Model Development: Build tailored generative AI models from scratch using cutting-edge architectures like transformers, diffusion models, and GANs to meet your specific business requirements and performance standards.

- AI Integration Services: Seamlessly integrate generative AI capabilities into existing systems and workflows with proper API development, data pipeline setup, and infrastructure optimization for scalable deployment.

- Domain Expertise Across Industries: Leverage specialized knowledge in healthcare, finance, retail, and manufacturing to develop compliant, industry-specific AI solutions that address unique challenges and regulatory requirements.

- End-to-End Implementation Support: Receive comprehensive support from initial consultation and strategy development through model training, testing, deployment, and ongoing maintenance with dedicated technical teams.

- Rapid Prototyping and MVP Development: Accelerate time-to-market with fast prototyping services that validate concepts, demonstrate value, and provide a foundation for full-scale implementation with minimal risk and investment.

Ready to move from exploration to execution? Let’s build your next AI-powered solution together. Get in Touch With Our AI Experts

Final words

Successful generative AI implementation requires a strategic approach that balances technical capabilities with business objectives and ethical considerations. Organizations that follow structured implementation frameworks, prioritize data quality, and maintain human oversight are significantly more likely to achieve production-scale success. The key lies in starting with narrow, high-impact use cases, building robust data foundations, and establishing clear governance processes before scaling.

Frequently asked questions

What industries are best suited for Generative AI?

Industries with high content creation needs, such as marketing, media, software development, and customer service, benefit most from generative AI implementation. Healthcare, finance, and legal sectors also see significant value in document automation and analysis, though they require additional compliance considerations. Manufacturing and retail industries leverage generative AI for product design, personalization, and supply chain optimization.

How long does it take to build a generative AI model?

Building a custom generative AI model typically takes 6-18 months, depending on complexity, data availability, and team expertise. Simple implementations using pre-trained APIs can be deployed in 2-8 weeks, while fine-tuning existing models generally requires 3-6 months. The timeline includes data preparation, model development, testing, and deployment phases.

What are the main costs involved in generative AI implementation?

Primary costs include computational resources for training and inference, specialized talent acquisition, data preparation and storage, and ongoing maintenance. Training costs range from $10,000 for simple models to millions for large custom models. Monthly operational costs include cloud infrastructure, API usage fees, and dedicated team salaries.

How do you ensure AI-generated content quality and accuracy?

Implement multi-layered quality control, including automated content filtering, human review processes, and continuous monitoring systems. Establish clear quality metrics, conduct regular bias testing, and maintain feedback loops for continuous improvement. Use domain experts for validation and implement version control to track content quality over time.

What technical expertise is required for generative AI implementation?

Core team needs include AI/ML engineers, data scientists, data engineers, and DevOps specialists with experience in deep learning frameworks. Domain expertise in your specific industry is crucial for quality assessment and use case validation. If internal expertise is limited, consider partnering with AI consultants or using managed services.

Laraib Malik is a passionate content writer specializing in AI, machine learning, and technology sectors. She creates authoritative, entity-based content for various websites, helping businesses develop E-E-A-T compliant materials with AEO and GEO optimization that meet industry standards and achieve maximum visibility across traditional and AI-powered search platforms.