Computer vision enables computers to interpret images and videos in a way modeled on the human visual system. It is a subset of artificial intelligence and is especially important because it allows information to be extracted from images and videos efficiently and accurately. This information is then used to improve decision-making across industries.

Computer vision can add value to practically every industry by making systems more efficient and better organized.

The importance of computer vision is clear from some of its major uses. First, it is used in medical diagnosis.

Image classification and pattern detection, both of which are computer vision tasks, are applied to CT scans to help detect cancer.

Computer vision is also used in factory management, retail, and security systems, partly because of its facial recognition ability. Ecologists and wildlife biologists use it to track rare species and gather data. Lastly, it is used in self-driving cars.

Because of this wide range of uses, and because it generally improves system efficiency and decision-making, computer vision is an extremely important part of AI.

Let’s start with the basic problems of computer vision.

What is image classification?

When an image is taken as input, labeling the image with a class name (e.g., car, cat) is known as image classification.

Image classification, also called image recognition, is a computer vision task. It refers to predicting the class of an object in an image; essentially, it assigns one or more labels to an image.

Image classification consists of inputs and outputs. The inputs are the images fed into the computer as data and the outputs are class labels that help differentiate the images.

There are two types of image classification: single-label classification and multi-label classification.

Single-label classification:

This is the most common type of image classification. In single-label classification, each image has exactly one label.

Multi-label classification:

This is more complex than single-label classification. In multi-label classification, each image may have more than one label. An example comes from the medical field, where a single X-ray or CT scan can reveal multiple diseases in one patient.
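To make the difference between the two types concrete, here is a minimal Python sketch of how a classifier's raw scores might be turned into labels. It assumes a model has already produced one raw score per class; the class names and scores are made up for illustration. The decoding rules are a common convention assumed here, not something stated above: softmax plus picking the top class for single-label classification, and an independent sigmoid per class with a 0.5 cutoff for multi-label classification.

```python
import math

# Hypothetical class names, for illustration only.
CLASSES = ["car", "cat", "dog"]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Squash one raw score into an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def single_label(scores):
    """Single-label: pick the one class with the highest probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best]

def multi_label(scores, threshold=0.5):
    """Multi-label: keep every class whose own probability passes the threshold."""
    return [c for c, s in zip(CLASSES, scores) if sigmoid(s) >= threshold]

scores = [2.0, -1.0, 1.2]  # hypothetical raw model outputs
print(single_label(scores))  # car
print(multi_label(scores))   # ['car', 'dog']
```

With these scores, the single-label version returns only `car`, while the multi-label version returns both `car` and `dog`, because each class is judged on its own.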

Now, what is object localization?

In an image, drawing a bounding box around a specific object is called object localization.

Object localization means recognizing the existence of one or more objects in an image and marking the location with a bounding box.

Object localization consists of inputs and outputs. The inputs are the images that have objects that need to be located. The outputs consist of the bounding boxes that indicate the location of an object.
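A bounding box is commonly stored as its corner coordinates. The sketch below assumes an `(x_min, y_min, x_max, y_max)` pixel format (other formats, such as center plus width and height, are also common) and adds intersection over union (IoU), a standard measure of how closely a predicted box overlaps a reference box. The `Box` class and the example coordinates are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """Axis-aligned bounding box in pixel coordinates (corner format, assumed)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Negative widths or heights (no overlap) count as zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)

def iou(a: Box, b: Box) -> float:
    """Intersection over union: overlap area divided by combined area."""
    inter = Box(max(a.x_min, b.x_min), max(a.y_min, b.y_min),
                min(a.x_max, b.x_max), min(a.y_max, b.y_max)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0

predicted = Box(10, 10, 50, 50)     # hypothetical model output
ground_truth = Box(20, 20, 60, 60)  # hypothetical reference box
print(round(iou(predicted, ground_truth), 3))  # 0.391
```

An IoU of 1.0 means the boxes coincide exactly and 0.0 means they do not overlap at all.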

What about object detection?

Object detection is the ability to both classify and locate objects in an image. It is a combination of both image classification and object localization.

Object detection consists of inputs and outputs. The input is an image with one or more objects present. The output is a set of bounding boxes marking the objects' locations, with a class label attached to each box.

Object detection covers both of the concepts above: it locates each object in the image, draws a bounding box around it, and assigns it a class label. One difference from image classification is that object detection classifies every object present in the image, whereas image classification assigns a single class label to the image as a whole.
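The difference in outputs can be sketched as plain data. In the example below, the labels, box coordinates, and confidence scores are hypothetical placeholders, as is the 0.5 confidence cutoff; the point is only that classification yields one label per image while detection yields one labeled box per object.

```python
from collections import Counter
from typing import NamedTuple

class Detection(NamedTuple):
    label: str    # class label for this object
    box: tuple    # (x_min, y_min, x_max, y_max) in pixels
    score: float  # model confidence in [0, 1]

# Image classification: one label for the whole image (hypothetical result).
classification_output = "street"

# Object detection: one labeled box per object found (hypothetical results).
detection_output = [
    Detection("car", (12, 40, 118, 96), 0.92),
    Detection("car", (130, 45, 210, 100), 0.81),
    Detection("person", (220, 20, 250, 110), 0.77),
    Detection("dog", (5, 5, 20, 20), 0.30),
]

def keep_confident(detections, min_score=0.5):
    """Drop low-confidence boxes; the threshold is an illustrative choice."""
    return [d for d in detections if d.score >= min_score]

confident = keep_confident(detection_output)
print(Counter(d.label for d in confident))  # Counter({'car': 2, 'person': 1})
```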

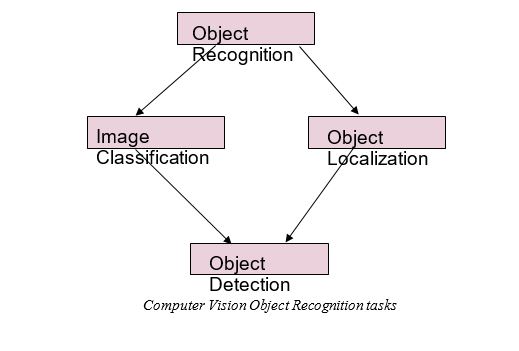

Lastly, what is object recognition?

Object recognition encompasses all of the concepts explained above: its definition includes image classification, object localization, and object detection.

Object recognition refers to classifying and locating objects in an image with a certain degree of accuracy.

Object recognition and object detection are similar techniques, but they differ in how they operate. In deep learning, object detection is treated as a subcategory of object recognition. Object detection focuses on locating and classifying objects in an image, whereas object recognition focuses mainly on identifying objects.

Object recognition consists of four processes:

- Image classification

- Tagging

- Object detection

- Segmentation

Classification and tagging refer to labeling objects in an image with their particular classes or tags. Put simply, these two processes focus on identifying the contents of an image.

Detection and segmentation refer to finding the location of objects in an image after the particular objects have been recognized. In object detection, the output is a rectangular bounding box while in segmentation, individual pixels of an object are identified to create a more precise map.
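The difference in precision between a bounding box and a segmentation mask can be shown on a tiny example. This sketch assumes a segmentation result given as a binary mask (1 for object pixels, 0 for background) and derives the tightest bounding box from it; the mask itself is made up. Counting pixels shows that the box also covers background pixels that the mask excludes.

```python
# A tiny hypothetical segmentation mask: 1 marks pixels belonging to the object.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

def mask_to_box(mask):
    """Return the tightest inclusive (x_min, y_min, x_max, y_max) box
    around the mask's object pixels, or None if the mask is empty."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))

box = mask_to_box(mask)
x_min, y_min, x_max, y_max = box
box_pixels = (x_max - x_min + 1) * (y_max - y_min + 1)  # pixels inside the box
mask_pixels = sum(map(sum, mask))                        # pixels on the object
print(box, box_pixels, mask_pixels)  # (1, 1, 4, 3) 12 7
```

Here the box spans 12 pixels while the object itself occupies only 7, which is the extra precision segmentation provides.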

Object recognition can be performed with machine learning or deep learning (a specialized form of machine learning). The two approaches produce similar outputs but differ in their methodology.

In machine learning, once the images are input, an extra feature engineering step takes place; this step does not happen in deep learning. Feature engineering requires manual input.

In deep learning, a separate feature engineering step is unnecessary because the model learns the features itself. As a result, deep learning systems require less human input but need more computational resources.

Whether to use machine learning or deep learning depends on how much data is available and how powerful the hardware is. With a lot of data and strong hardware, deep learning can be used; otherwise, machine learning is the better choice.

The flowcharts below give a better understanding of how object recognition works.

How Does Object Recognition Work?

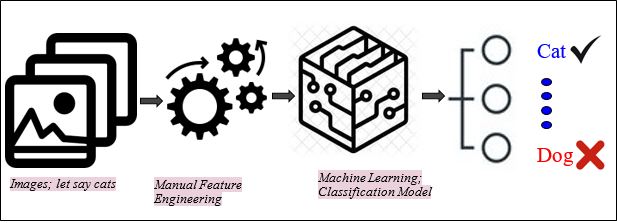

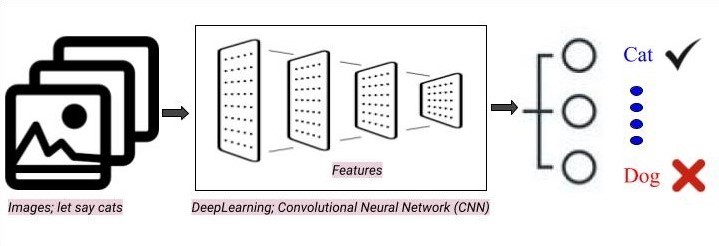

Several approaches can be used for object recognition. The two main approaches are machine learning and deep learning. Their outputs are broadly similar, but they differ in how they are executed, as the diagrams above illustrate.

Machine Learning:

In machine learning, once the images are taken as input, an additional feature engineering step is executed that deep learning does not need. Feature engineering requires manual input. The extracted features are then passed to a machine learning model, such as a classification model, which is trained on them.
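This machine learning pipeline can be sketched in a few lines of plain Python. Everything here is an illustrative assumption: the two hand-picked features (mean brightness and fraction of bright pixels) stand in for the manual feature engineering step, a nearest-centroid classifier stands in for the classification model, and the tiny "day"/"night" images are invented.

```python
import math

def extract_features(image):
    """Hand-engineered features (chosen by a person, not learned):
    normalized mean intensity and fraction of pixels brighter than 128."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bright = sum(p > 128 for p in pixels) / len(pixels)
    return (mean / 255.0, bright)

def train_nearest_centroid(images, labels):
    """'Training' here just averages the feature vectors of each class."""
    sums, counts = {}, {}
    for img, lab in zip(images, labels):
        f = extract_features(img)
        s = sums.setdefault(lab, [0.0] * len(f))
        for i, v in enumerate(f):
            s[i] += v
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: [v / counts[lab] for v in s] for lab, s in sums.items()}

def predict(centroids, image):
    """Assign the class whose centroid is closest in feature space."""
    f = extract_features(image)
    return min(centroids, key=lambda lab: math.dist(f, centroids[lab]))

# Hypothetical 2x2 grayscale training images and labels.
train_images = [
    [[200, 220], [210, 230]],
    [[180, 190], [250, 240]],
    [[10, 20], [30, 15]],
    [[40, 5], [25, 60]],
]
train_labels = ["day", "day", "night", "night"]

centroids = train_nearest_centroid(train_images, train_labels)
print(predict(centroids, [[190, 205], [215, 199]]))  # day
print(predict(centroids, [[12, 33], [8, 50]]))       # night
```

Note that a person decided which features to compute; if brightness were not the right cue for the task, the classifier could not compensate. That dependence on hand-chosen features is what the deep learning approach avoids.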

Deep Learning:

In deep learning, the feature engineering step is not needed because the model itself performs both feature extraction and classification. Deep learning models need less human input but require more computational resources.

The question that arises is how to choose between the two approaches. A few things should be considered. If it is known which features will give the best results for the problem, and resources such as a powerful GPU are not available, then a machine learning technique is the better approach. If the useful features are not known, and a large training dataset and a good GPU are available, then deep learning is preferable.

Some Other Techniques

- Template Matching: a technique in which smaller images, called templates, are compared against regions of an input image to find matches. One drawback that makes it a less preferred approach is that you need to know in advance what features you are looking for, so it does not handle dynamic (changing) features well.

- Image Segmentation and Blob Analysis: this approach works by finding shape, colour, and size features. It segments the image's pixels into regions and then locates the features within them. If the image has noise, varying contrast, or unclear boundaries, this approach may not be the most feasible option.
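Template matching can be sketched as sliding the template over every position in the image and scoring each position by the sum of squared differences (SSD) between pixel values, where lower is better. This is a minimal pure-Python sketch on a made-up grayscale image; SSD is one common scoring choice among several (normalized cross-correlation is another).

```python
def match_template(image, template):
    """Slide the template over every position in the image and return the
    top-left (x, y) with the smallest sum of squared differences (SSD)."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, float("inf")
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            ssd = sum(
                (image[y + dy][x + dx] - template[dy][dx]) ** 2
                for dy in range(th)
                for dx in range(tw)
            )
            if ssd < best_score:
                best_pos, best_score = (x, y), ssd
    return best_pos, best_score

# Hypothetical grayscale image containing the template at x=2, y=1.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [
    [9, 8],
    [7, 9],
]
print(match_template(image, template))  # ((2, 1), 0)
```

Because the template is fixed, a rotated, rescaled, or otherwise changed version of the object would no longer score well, which is the drawback noted for this technique. In practice, OpenCV offers this operation as `cv2.matchTemplate`, with `cv2.TM_SQDIFF` as one of its scoring methods.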
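Blob analysis can be sketched as two steps: threshold the image to separate foreground from background, then group neighbouring foreground pixels into blobs and measure each one. The sketch below assumes a grayscale image, a fixed threshold of 100, and 4-connectivity (pixels touching up, down, left, or right); all of these, and the example image, are illustrative choices.

```python
from collections import deque

def find_blobs(image, threshold):
    """Threshold the image, then group foreground pixels into 4-connected
    blobs and report each blob's size (pixel count) and centroid (x, y)."""
    h, w = len(image), len(image[0])
    fg = [[image[y][x] > threshold for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for sy in range(h):
        for sx in range(w):
            if not fg[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            queue = deque([(sx, sy)])
            seen[sy][sx] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and fg[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            size = len(pixels)
            cx = sum(x for x, _ in pixels) / size
            cy = sum(y for _, y in pixels) / size
            blobs.append({"size": size, "centroid": (cx, cy)})
    return blobs

# Hypothetical grayscale image with two bright regions.
image = [
    [200, 210,   0,   0,   0],
    [205,   0,   0, 180,   0],
    [  0,   0,   0, 190, 185],
    [  0,   0,   0,   0,   0],
]
for blob in find_blobs(image, threshold=100):
    print(blob)
```

A single noisy pixel above the threshold would appear as an extra one-pixel blob, which is one reason noise makes this approach less reliable.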