Optimization is central to machine learning: almost every machine learning algorithm has an optimization algorithm at its core. Businesses today use machine learning solutions to enhance their capabilities, and highly customized machine learning algorithms help organizations get the most out of their operations.

Machine learning solutions offer many benefits, such as speech-to-text, computer vision applications that scale up visual data analysis, natural language processing that draws more value from your data, and predictive analysis for making smarter decisions.

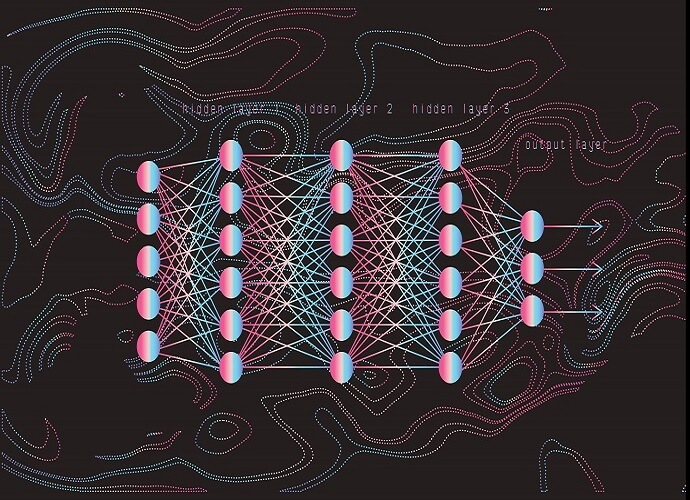

Gradient descent is an optimization algorithm that minimizes a function by repeatedly moving in the direction of steepest descent, which is given by the negative of the gradient. In machine learning, we use a gradient descent optimizer to update a model's parameters, i.e. the weights in neural networks and the coefficients in linear regression.
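To make the idea concrete, here is a minimal, framework-free sketch of gradient descent in plain Python. It is not TensorFlow code; the function name `gradient_descent`, the example function f(x) = (x - 3)^2, and the default values are assumptions chosen only for illustration.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        # Move in the direction of steepest descent (the negative gradient).
        x = x - learning_rate * grad(x)
    return x


# Hypothetical example: minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
print(minimum)  # a value very close to 3, where f is smallest
```

Each iteration shrinks the distance to the minimum by a constant factor here, which is why a modest number of steps is enough for this simple function.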

Simply put, the TensorFlow gradient descent optimizer is used to find the values of the coefficients (parameters) of a function (f) that minimize a cost function (cost).

The TensorFlow gradient descent optimizer is most useful when the parameters cannot be calculated analytically, for example by using linear algebra, and an optimization algorithm must search for their values instead.
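The contrast between the two approaches can be sketched on a problem small enough to solve both ways. The example below is plain Python rather than TensorFlow, and the data, the function names `closed_form` and `iterative`, and the hyperparameters are all made up for illustration. For one-feature linear regression an analytic solution exists, so the iterative search can be checked against it.

```python
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # made-up data that follows y = 2x + 1


def closed_form(xs, ys):
    """Analytic least-squares fit for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def iterative(xs, ys, learning_rate=0.05, steps=5000):
    """Search for the same coefficients with gradient descent on the mean squared error."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [weight * x + bias - y for x, y in zip(xs, ys)]
        grad_weight = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_bias = 2.0 / n * sum(errors)
        weight -= learning_rate * grad_weight
        bias -= learning_rate * grad_bias
    return weight, bias


print(closed_form(xs, ys))
print(iterative(xs, ys))
```

Both calls should agree closely on this data. For models without such a formula, only the iterative route is available.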

How to Set Up Regression Using Gradient Descent Optimizer in TensorFlow

First things first: load the necessary libraries.

Follow this URL for complete code.

How to Load a Dataset for Gradient Descent Optimizer in TensorFlow

Load the dataset and check its summary statistics.

Now follow this URL for complete data set code.

How to Build Your First Model

You want to predict the salary, which will be your label, so you will use projects as your input feature. To train your model, you will use the LinearRegressor interface provided by the TensorFlow Estimator API. This API takes care of much of the low-level model plumbing and provides convenient methods for training, evaluation, and inference.

Step 1 – Defining Features and Configuring Feature Columns

TensorFlow uses a construct called a feature column to indicate the data type of a feature. A feature column stores only a description of the feature data; it does not contain the feature data itself.

To begin, you will use a single numeric input feature, i.e. projects.

Step 2 – Defining The Target

In this step, you will define your target, i.e. salary. It can also be extracted from your data frame.

Step 3 – Configuration of the LinearRegressor

Here you will configure a linear regression model using LinearRegressor. The model will be trained with the gradient descent optimizer, which implements mini-batch Stochastic Gradient Descent (SGD). The learning_rate argument controls the size of the gradient step.
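To show what a learning rate does to a single mini-batch update, here is a small plain-Python sketch. It does not use LinearRegressor or any TensorFlow call; the helper `sgd_step` and the two-row batch of (projects, salary) pairs are hypothetical and exist only to illustrate the arithmetic.

```python
def sgd_step(weight, bias, batch, learning_rate):
    """Apply one mini-batch SGD update to a one-feature linear model."""
    n = len(batch)
    # Prediction error for each (feature, target) pair in the mini-batch.
    errors = [weight * x + bias - y for x, y in batch]
    # Gradients of the mean squared error with respect to weight and bias.
    grad_weight = 2.0 / n * sum(e * x for e, (x, _) in zip(errors, batch))
    grad_bias = 2.0 / n * sum(errors)
    # learning_rate scales how far the parameters move along the negative gradient.
    return weight - learning_rate * grad_weight, bias - learning_rate * grad_bias


batch = [(1.0, 3.0), (2.0, 5.0)]  # made-up (projects, salary) pairs
small = sgd_step(0.0, 0.0, batch, learning_rate=0.01)
large = sgd_step(0.0, 0.0, batch, learning_rate=0.1)
print(small, large)
```

With the same batch and the same gradients, the update with the larger learning rate moves the weight and bias ten times as far. Too small a value makes training slow, while too large a value can overshoot the minimum.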

Step 4 – Defining The Input Function

To import your salary data into LinearRegressor, you need to define an input function. It tells TensorFlow how to preprocess the data and how to shuffle, batch, and repeat it while the model trains.

Begin by converting your pandas feature data into NumPy arrays. You can then use the TensorFlow Dataset API to construct a dataset object from your data and split it into batches of batch_size, repeated for the specified number of epochs (num_epochs).

If shuffle is set to True, the data is shuffled so that it is passed to the model in random order during training. The buffer_size argument specifies the size of the dataset from which shuffle will sample randomly.

Lastly, your input function constructs an iterator for the dataset and returns the next batch of data to the LinearRegressor.
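The shuffle, batch, and repeat behavior described above can be imitated with a plain-Python generator. This is a simplified stand-in, not the TensorFlow Dataset API: it assumes the data fits in a list, it shuffles the whole dataset each epoch instead of sampling from a buffer of buffer_size, and the `seed` parameter is an addition for reproducibility.

```python
import random


def my_input_fn(features, targets, batch_size=1, shuffle=True, num_epochs=None, seed=None):
    """Yield (feature_batch, target_batch) pairs, reshuffling every epoch."""
    rng = random.Random(seed)
    indices = list(range(len(features)))
    epoch = 0
    # num_epochs=None repeats the data indefinitely.
    while num_epochs is None or epoch < num_epochs:
        if shuffle:
            rng.shuffle(indices)
        # Cut the (possibly shuffled) order into consecutive batches.
        for start in range(0, len(indices), batch_size):
            chosen = indices[start:start + batch_size]
            yield [features[i] for i in chosen], [targets[i] for i in chosen]
        epoch += 1


projects = [1, 2, 3, 4, 5]       # made-up feature values
salary = [10, 20, 30, 40, 50]    # made-up targets
batches = list(my_input_fn(projects, salary, batch_size=2, shuffle=False, num_epochs=2))
print(batches)
```

Because features and targets are selected with the same indices, each feature stays paired with its own target even when the order is shuffled.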

Step 5 – Training The Model

To train your model, you can now call train() on your LinearRegressor. Wrap my_input_fn in a lambda so that you can pass in my_feature and targets as arguments. To start, you will train for 100 steps.
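The reason for the lambda is that the trainer calls the input function itself, with no arguments. The sketch below shows the pattern in plain Python. The `train` function here is a hypothetical stand-in, not the Estimator method, and this `my_input_fn` is a deliberately simplified version that just cycles through the data in order.

```python
def train(input_fn, steps):
    """Hypothetical stand-in for a trainer that calls input_fn() with no arguments."""
    batches = input_fn()
    # A real trainer would update the model here; this one only collects the batches.
    return [next(batches) for _ in range(steps)]


def my_input_fn(features, targets, batch_size=1):
    """Simplified input function: cycles through the data in order, forever."""
    position = 0
    while True:
        chosen = [(position + k) % len(features) for k in range(batch_size)]
        yield [features[i] for i in chosen], [targets[i] for i in chosen]
        position = (position + batch_size) % len(features)


my_feature = [1, 2, 3]     # made-up feature values
targets = [10, 20, 30]     # made-up targets

# The lambda takes no arguments, but it captures my_feature and targets for later use.
seen = train(lambda: my_input_fn(my_feature, targets, batch_size=2), steps=2)
print(seen)
```

Passing `my_input_fn(my_feature, targets)` directly would run the function immediately and hand over its result, whereas the lambda hands over something the trainer can call when it is ready.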

How to Optimize a Model in TensorFlow?

Here, we are going to train in ten evenly divided periods so that the model's improvement is easier to follow.

For every period, we will compute and graph the training loss. This helps you judge whether the model has converged or needs more iterations.

Plotting the feature weight and bias term values learned by the model can also help you understand the convergence process.
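The period-by-period bookkeeping can be sketched without any framework. The example below is plain Python with full-batch gradient descent on made-up data; the function name `train_in_periods`, the choice of RMSE as the loss, and the default values are assumptions for illustration, and printing stands in for graphing.

```python
import math


def train_in_periods(xs, ys, learning_rate=0.05, steps=100, periods=10):
    """Train a one-feature linear model and record progress after each period."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    steps_per_period = steps // periods
    history = []  # one (rmse, weight, bias) tuple per period
    for _ in range(periods):
        for _ in range(steps_per_period):
            errors = [weight * x + bias - y for x, y in zip(xs, ys)]
            weight -= learning_rate * 2.0 / n * sum(e * x for e, x in zip(errors, xs))
            bias -= learning_rate * 2.0 / n * sum(errors)
        # Root mean squared error on the training data at the end of the period.
        rmse = math.sqrt(sum((weight * x + bias - y) ** 2 for x, y in zip(xs, ys)) / n)
        history.append((rmse, weight, bias))
    return history


history = train_in_periods([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
for period, (rmse, weight, bias) in enumerate(history):
    print(f"period {period:02d}: rmse={rmse:.4f} weight={weight:.3f} bias={bias:.3f}")
```

If the loss is still falling noticeably in the last periods, as it is here, the model has not converged yet and more steps are worth trying.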

Take Away

Some essentials you must always remember:

- Optimization is one of the most essential aspects of machine learning.

- Gradient descent optimizer is an optimization algorithm that can be used with various machine learning algorithms.

- A gradient descent optimizer is most useful when the parameters cannot be calculated analytically, for example by using linear algebra, and an optimization algorithm must search for their values instead.

If you have any questions about TensorFlow Gradient Descent Optimizer for machine learning, contact us today and we will address your concerns right away.

FAQs

What is convergence in TensorFlow AI?

Convergence is the state reached during model training in which the training loss and validation loss change very little, or not at all, from one iteration to the next after a certain number of iterations. Simply put, a model has converged when further training on the existing data no longer improves it. In deep learning, loss values often stay constant for many iterations before they start to descend again, which can give you a false sense of convergence.
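One simple way to turn this definition into a check is to ask whether the loss has improved meaningfully over the most recent iterations. The sketch below is plain Python; the function `has_converged` and its `patience` and `tolerance` parameters are hypothetical names, not part of TensorFlow, and the loss values are made up.

```python
def has_converged(losses, patience=5, tolerance=1e-4):
    """Return True when the last `patience` losses improve on earlier ones by at most `tolerance`."""
    if len(losses) <= patience:
        return False  # not enough history to judge
    best_before = min(losses[:-patience])
    best_recent = min(losses[-patience:])
    return best_before - best_recent <= tolerance


still_improving = [1.0, 0.5, 0.25, 0.12, 0.06, 0.03, 0.015]
flat = [1.0, 0.5, 0.3, 0.3, 0.3, 0.3]
plateau_then_drop = [1.0, 1.0, 1.0, 1.0, 0.2, 0.1]

print(has_converged(still_improving, patience=3))
print(has_converged(flat, patience=3))
# Judged too early, the plateau looks like convergence even though the loss later falls.
print(has_converged(plateau_then_drop[:4], patience=3))
print(has_converged(plateau_then_drop, patience=3))
```

The third call illustrates the false sense of convergence mentioned above: a check made during a long plateau reports convergence. A larger `patience` lowers that risk but cannot remove it.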

What is the TF train Adam optimizer for machine learning?

The TensorFlow train Adam optimizer is an optimization algorithm that can be used in place of classical stochastic gradient descent in TensorFlow to update network weights iteratively based on training data.

These are some benefits of using Adam optimizer:

- It is straightforward to implement.

- It is computationally efficient.

- It has small memory requirements.

- It is well suited to problems that are large in terms of parameters and/or data.

- It is suitable for non-stationary objectives.

- It is suitable for problems with noisy or sparse gradients.
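For readers curious about what Adam does differently from plain gradient descent, here is a plain-Python sketch of the standard Adam update for a single parameter. It is not the TensorFlow implementation; the function name `adam_minimize`, the example function, and the learning rate of 0.1 are assumptions for illustration, while the beta and epsilon defaults are the commonly used ones.

```python
import math


def adam_minimize(grad, start, learning_rate=0.1, beta1=0.9, beta2=0.999,
                  epsilon=1e-8, steps=500):
    """Minimize a one-parameter function with the Adam update rule."""
    x = start
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Correct the bias caused by starting both averages at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Scale the step by the gradient's recent magnitude.
        x -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return x


# Hypothetical example: minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
result = adam_minimize(lambda x: 2 * (x - 3), start=0.0)
print(result)
```

The only state kept per parameter is the pair of running averages `m` and `v`, which is why the memory overhead is small.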

What is the TensorFlow optimizer for beginners?

TensorFlow is the second machine learning framework created by Google, and it is used to design, build, and train deep learning models. The TensorFlow library performs numerical computations using data flow graphs.

Start Growing with Folio3 AI Today

We are the Pioneers in the Gradient Descent Optimizer Arena – Do you want to become a pioneer yourself?

Please feel free to reach out to us if you have any questions or need help with the development, installation, integration, upgrade, or customization of your business solutions. We have expertise in machine learning solutions, Cognitive Services, predictive learning, CNN, HOG, and NLP.

Connect with us for more information at Contact@folio3.ai