Executive Summary:

Artificial intelligence (AI) can now reconstruct human and animal motion sequences from video recordings. It does so with computer vision and machine learning, methods that let computers analyze and interpret the content of images and video. Deep learning is a popular approach: neural networks can identify and classify movements and patterns in video data. For instance, researchers have trained neural networks to recognize human motions such as walking, running, and jumping, and to reconstruct them in three dimensions. This article discusses AI motion reconstruction and its potential uses.

Imagine we are on safari, watching a giraffe graze. We look away for a moment, and when we look back the animal has lowered its head and sat down. What happened in between? Computer scientists at the University of Konstanz’s Centre for the Advanced Study of Collective Behaviour have developed a method that encodes an animal’s pose and appearance so that it can display the intermediate motions that are statistically likely to have taken place.

A major difficulty in computer vision is that images are so complex. A giraffe, for example, can take on an extremely wide range of poses. On a safari, missing part of a movement sequence is usually no problem, but when studying collective behavior this information can be crucial. This is where the computer scientists step in with their new “neural puppeteer” model.

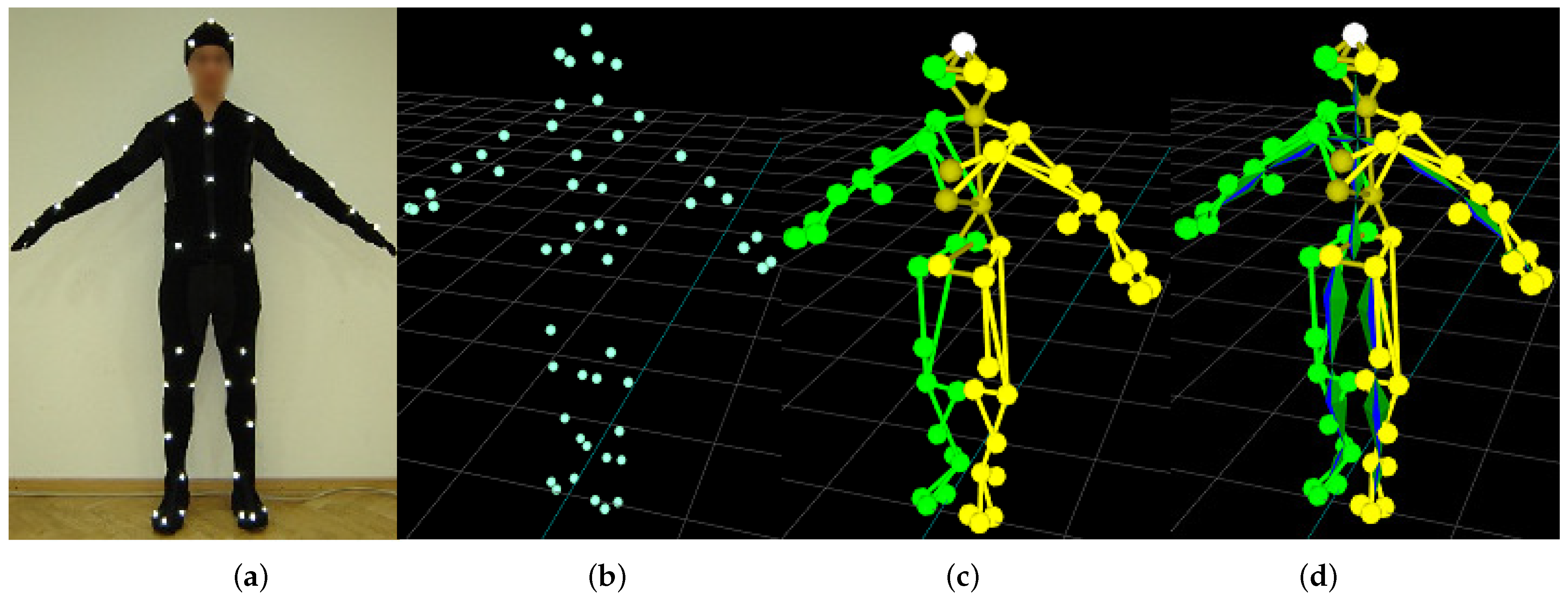

Using 3D points to create predictive outlines

“One of the goals in computer vision is to describe a tremendously complicated image space by encoding as few parameters as possible,” says Bastian Goldlücke, professor of computer vision at the University of Konstanz. One representation used frequently so far is the skeleton. In a recent paper published in the Proceedings of the 16th Asian Conference on Computer Vision, Bastian Goldlücke, Urs Waldmann, and Simon Giebenhain present a neural network model that can represent motion sequences and render the complete appearance of animals from any viewpoint, based on only a few key points. Compared with conventional skeleton models, the 3D representation is more adaptable and precise.

“The goal was to be able to predict 3D key points and also to track them independently of texture,” says doctoral researcher Urs Waldmann. “That is why we developed an AI system that uses 3D key points to predict silhouette images from any camera angle.” Running the process in reverse also makes it possible to determine skeleton points from silhouette images. Based on the key points, the AI system can calculate the intermediate steps that are statistically likely. Using the individual silhouette can be important: if you work only with skeleton points, you cannot tell whether the animal you are looking at is massive or close to starvation.
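To make the idea of key points, camera views, and intermediate steps concrete, here is a minimal sketch. It is not the researchers' neural network: it swaps in plain linear interpolation between two poses and a simple pinhole projection, and the three-point "giraffe", the coordinates, and the function names `interpolate_pose` and `project` are all hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def interpolate_pose(start: List[Point3D], end: List[Point3D], t: float) -> List[Point3D]:
    """Linearly blend two poses; t=0 returns the start pose, t=1 approaches the end pose."""
    if len(start) != len(end):
        raise ValueError("poses must have the same number of key points")
    return [
        tuple(a + t * (b - a) for a, b in zip(p, q))
        for p, q in zip(start, end)
    ]


def project(points: List[Point3D], focal_length: float = 1.0) -> List[Point2D]:
    """Pinhole projection for a camera at the origin looking along +z."""
    projected = []
    for x, y, z in points:
        if z <= 0:
            raise ValueError("point must lie in front of the camera (z > 0)")
        projected.append((focal_length * x / z, focal_length * y / z))
    return projected


# Hypothetical key points (x, y, z): head, shoulder, hip.
standing = [(0.0, 4.0, 10.0), (0.5, 2.5, 10.0), (2.0, 2.0, 10.0)]
sitting = [(0.0, 2.0, 10.0), (0.5, 1.0, 10.0), (2.0, 0.5, 10.0)]

# A naive guess at the pose halfway between the two observations.
halfway = interpolate_pose(standing, sitting, 0.5)
print(halfway)

# The same key points as seen in the 2D image of the assumed camera.
print(project(halfway))
```

A learned model would replace the straight-line blend with poses that are statistically likely for the animal, and would render a full silhouette rather than a handful of projected points.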

The model has applications in biology in particular. “In the Cluster of Excellence ‘Centre for the Advanced Study of Collective Behaviour’, we see that many different species of animals are tracked, and poses also need to be predicted in this context,” says Waldmann.

Long-term objective: apply the system to as much wildlife data as possible

The team started by predicting silhouettes of humans, pigeons, giraffes, and cows. Humans are frequently used as test cases in computer science, Waldmann notes. His colleagues in the Cluster of Excellence work with pigeons, whose fine claws pose a significant challenge. Good model data was available for cows, while the giraffe’s extraordinarily long neck was a challenge Waldmann was eager to take on. The team generated the silhouettes from only a few key points, between 19 and 33.

The computer scientists are now ready for practical applications. In the future, data on insects and birds will be gathered in the Imaging Hangar, the University of Konstanz’s largest laboratory for studying collective behavior. In the Imaging Hangar, environmental factors such as lighting and background are easier to control than in the wild. The long-term objective, however, is to train the model on as many species of wild animals as possible in order to gain new insight into animal behavior.

Conclusion:

AI motion reconstruction can transform how we study motion and provide fresh perspectives on how animal detection works and how living things move. Because it can learn from enormous datasets and reconstruct complex movements, it is a useful tool for applications in sports, health, animation, robotics, and video surveillance. It is also crucial to address the ethical issues the technology raises and to make sure it is applied responsibly. Reaching its full potential and benefiting society will require continued investment in research and development.

FAQs:

How does animal AI function?

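In animal research, AI here means artificial intelligence (not artificial insemination, which shares the abbreviation): computer vision and machine learning models that detect and track animals and reconstruct their poses from images and video. The neural puppeteer model described above, for example, predicts an animal’s silhouette from a small set of 3D key points.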

How accurate is AI at recognizing animals in pictures when compared to humans?

One reported deep learning system automates animal identification for 99.3% of the data while performing at the same 96.6% accuracy as crowdsourced teams of human volunteers, saving more than 8.4 years (more than 17,000 hours at 40 hours per week) of human labeling effort.
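The conversion between labeling hours and working years in the figure above can be checked with a few lines of arithmetic. This is only a sketch: the number of working weeks per year is not stated in the answer, so the values of 50 and 52 used below are assumptions, and the function name `labeling_years` is hypothetical.

```python
def labeling_years(total_hours: float,
                   hours_per_week: float = 40.0,
                   weeks_per_year: float = 50.0) -> float:
    """Convert a number of labeling hours into full-time working years."""
    return total_hours / hours_per_week / weeks_per_year


# 17,000 hours at 40 hours per week, assuming 50 working weeks per year.
print(labeling_years(17_000))  # 8.5

# The same hours assuming all 52 calendar weeks are worked.
print(labeling_years(17_000, weeks_per_year=52.0))
```

The result depends noticeably on the assumed weeks per year, which is why the hours figure is the more precise of the two.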

How is AI enabling humans to communicate with animals?

Researchers are experimenting with AI-enabled robots that exchange signals with animals, with the aim of lowering the barrier to interspecies communication. This work, carried out with honeybees, dolphins, and, to a lesser extent, elephants, is still at a very early stage.

Can we interact with animals using AI?

To a limited degree. Scientists are using advanced sensors and artificial intelligence to observe and decode how many species, including plants, share information through their communication systems.

How is AI image detection implemented?

Image recognition analyzes the pixels of an image to extract relevant features. After training on example images, computer vision models running on AI cameras can detect and recognize a wide variety of objects.