Introduction

Meta’s Llama 3.2 models mark a landmark advancement in AI, especially for edge and on-device applications. Spanning everything from vision models with 11B and 90B parameters to lightweight text-only models with 1B and 3B parameters, Llama 3.2 opens new possibilities for real-time, private AI across industries.

In this blog post, we discuss how these models work, their real-world applications, and what distinguishes them in multimodal AI.

About Llama 3.2 Vision Models

Llama 3.2’s vision models, available in 11-billion- and 90-billion-parameter sizes, are designed for complex image-interpretation tasks. They support applications such as document analysis, caption generation, and visual reasoning. For example, a retail manager could use these models to draw insights from a chart of sales data, while a hiker could use them to interpret a trail map or visual markers along a route, both made possible by Llama 3.2’s combined image and text processing.

Expanded Multimodal Capabilities

The 11B and 90B models in Llama 3.2 are multimodal: they accept either text alone or text plus an image as input, and they always produce text as output. As the first Llama models to support vision tasks, they use an architecture that integrates an image encoder with the language model, offering flexible solutions for diverse use cases.
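To make the two input modes concrete, here is a minimal sketch of a single-turn request in the message format shown on the Hugging Face model cards for Llama 3.2 Vision, where an `{"type": "image"}` entry marks where the image goes. The helper name `build_messages` and the prompts are hypothetical. In a real pipeline these messages would be passed to the model’s processor (for example through its `apply_chat_template` method), which requires the `transformers` library and access to the gated weights; this sketch only builds the data structure.

```python
from typing import Any, Dict, List


def build_messages(prompt: str, with_image: bool = False) -> List[Dict[str, Any]]:
    """Build a single-turn user message for a Llama 3.2 Vision chat request (hypothetical helper)."""
    content: List[Dict[str, Any]] = []
    if with_image:
        # Placeholder only: the pixel data is handed to the processor separately.
        content.append({"type": "image"})
    content.append({"type": "text", "text": prompt})
    return [{"role": "user", "content": content}]


# Text-only input -> text output
text_only = build_messages("Summarize the key features of Llama 3.2 in one sentence.")

# Text + image input -> text output
text_and_image = build_messages("What trend does this sales chart show?", with_image=True)

print(text_only)
print(text_and_image)
```

Either way, the model’s response is text; the image only ever appears on the input side.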

- Unpacking the 90B Vision Model: Meta’s Most Advanced AI for Enterprise

The 90B Vision model is Meta’s most advanced tool for enterprise applications. Tailored for large-scale use, this model excels in tasks that require text generation and sophisticated image reasoning. It performs exceptionally well in areas such as comprehensive knowledge synthesis, long-form content generation, coding, multilingual translation, mathematical reasoning, and problem-solving.

The model’s multimodal capability allows it to process visual data alongside text, supporting tasks like image captioning, visual question answering, and document-based image-text retrieval. This functionality is especially valuable for businesses that require detailed image analysis and language processing, as it can respond to queries involving images, retrieve related image-text pairs, interpret visuals in documents, and ground textual information in visual content.

- The 11B Vision Model: Versatile AI for Content Creation and Conversational Use

Complementing the 90B model, the 11B Vision model is optimized for versatile, interactive applications. While it shares many capabilities with the 90B model, it is particularly effective in content generation and conversational AI. This model supports tasks like summarizing long texts, interpreting sentiment, generating code, and following complex instructions, with the added benefit of image-based reasoning. The 11B Vision model is ideal for enterprises needing both language and visual comprehension in a smaller, less resource-intensive package, performing robustly across tasks like image captioning, visual question answering, and document analysis through image-text retrieval.

- Enhanced Efficiency and Language Support

Llama 3.2’s vision models are optimized for efficiency, offering lower latency and strong performance across a wide range of AI workloads. They keep the 128K-token context length introduced in Llama 3.1, allowing them to process long inputs. The models also officially support eight languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai), broadening their accessibility for global applications.

Llama 3.2 Vision Model Architecture: Powered by a Robust Image-Enhanced Language Model

At the core of Llama 3.2 Vision’s design is the Llama 3.1 text-only model, an auto-regressive language model built on an optimized transformer architecture. To support image recognition, Llama 3.2 Vision models add a separately trained vision adapter that connects an image encoder to the language model. This adapter consists of cross-attention layers that feed the image encoder’s outputs into the language model, letting information flow between visual and textual representations.

This cross-attention mechanism enables the model to produce coherent, context-sensitive outputs for both text and image inputs. Llama 3.2 has also been refined through supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), which align its responses with user expectations for accuracy, relevance, and safety.
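The cross-attention adapter described above can be illustrated with a toy, single-head version in plain Python: each text position scores every image-encoder output, turns the scores into weights with a softmax, takes a weighted mix of the image features, and adds the result back onto the text hidden state as a residual. This is a simplified sketch under stated assumptions, not Meta’s implementation: it omits the learned query/key/value projections, multiple heads, normalization, and the other details of the real adapter, and the toy vectors are made up.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_queries: Matrix, image_keys: Matrix, image_values: Matrix) -> Matrix:
    """Single-head scaled dot-product cross-attention.

    Queries come from the text side; keys and values come from the image encoder.
    """
    d = len(image_keys[0])
    outputs: Matrix = []
    for q in text_queries:
        # Similarity of this text position to every image position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in image_keys]
        weights = softmax(scores)
        # Weighted mix of image features for this text position.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, image_values))
            for j in range(len(image_values[0]))
        ]
        outputs.append(mixed)
    return outputs


def add_residual(hidden: Matrix, update: Matrix) -> Matrix:
    """Add the attended image information back onto the text hidden states."""
    return [[h + u for h, u in zip(hr, ur)] for hr, ur in zip(hidden, update)]


# Toy example: 2 text tokens and 3 image-encoder outputs, all 2-dimensional.
text_hidden = [[1.0, 0.0], [0.0, 1.0]]
image_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

attended = cross_attention(text_hidden, image_features, image_features)
updated = add_residual(text_hidden, attended)
print(updated)
```

Because the text side supplies the queries, the language model decides which parts of the image to draw on at each position, while the image features themselves are never rewritten by the text.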

How Llama 3.2 Vision Models Operate

Llama 3.2 Vision models handle multimodal inputs by combining a language model with an image encoder. Key elements of the architecture include:

- Image Encoder

This component analyzes images and extracts essential features, breaking complex visual data down into structured representations. It captures patterns, shapes, textures, and objects, transforming visual information into a form the language model can interpret alongside text.

- Cross-Attention Mechanism

Cross-attention layers align visual data with text-based information, supporting a comprehensive understanding of both. This mechanism allows the language model to “see” and “describe” visual elements in a contextually aware manner, seamlessly integrating image features into the textual processing framework.

- Rich Multimodal Interaction

Llama 3.2 Vision models handle a range of complex multimodal tasks, from generating descriptive captions for images to answering questions based on visual inputs and retrieving relevant text-image pairs. This layered structure enables the model to produce accurate, contextually relevant responses for customer inquiries, visual data interpretation, and document-based visual analysis.
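The image encoder’s first step can be illustrated with the patch splitting used by Vision Transformer (ViT)-style encoders, in which an image is cut into fixed-size patches that each become one input vector. Treat this as an illustrative assumption rather than a description of Meta’s encoder: the sketch uses a tiny grayscale image and an arbitrary patch size, and it stops before the learned projection, position embeddings, and transformer layers that a real encoder applies to each patch.

```python
from typing import List

Image = List[List[float]]  # toy grayscale image, H rows x W columns


def patchify(image: Image, patch_size: int) -> List[List[float]]:
    """Cut an image into non-overlapping square patches, in raster order.

    Each patch is flattened row by row into one vector, which a ViT-style
    encoder would then project into an embedding.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A 4 x 4 "image" whose pixel values are 0..15, split into 2 x 2 patches.
image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
patches = patchify(image, patch_size=2)
print(len(patches))  # 4
print(patches[0])    # [0.0, 1.0, 4.0, 5.0]
```

The number of patches grows with image size: for example, a 224 x 224 image split into 14 x 14 patches yields (224 / 14)^2 = 16^2 = 256 patch vectors for the rest of the encoder to process.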

Applications of Llama 3.2 Vision Models Across Industries

Llama 3.2 Vision models are versatile, making them suitable for specialized domains:

- Healthcare Diagnostics

In medical imaging, these models could assist in early diagnostics by flagging potential abnormalities in scans such as X-rays or MRIs. For instance, a radiology tool could use the model to highlight areas of concern, providing preliminary insights that streamline healthcare providers’ analysis.

- E-commerce and Retail

In retail, Llama 3.2 can optimize inventory management by categorizing and tagging products via image recognition. A retail app might leverage this to auto-describe new products, classify items, or even suggest styles based on the visual features of a catalog.

- Education and Accessibility

Llama 3.2 can enhance accessibility by describing images and summarizing documents, and its text output can be passed to a text-to-speech system to read content aloud or guide visually impaired users through visual information. In educational settings, this functionality enables more inclusive learning, making visual content accessible to all students.

A Unique, Adaptable Architecture

A defining characteristic of Llama 3.2’s vision models is their open, modular design. Although only eight languages are officially supported, Llama 3.2 has been trained on a broader collection of languages, and developers may fine-tune the models for additional languages in compliance with the Llama 3.2 Community License and Acceptable Use Policy.

The adaptable architecture also lets developers fine-tune these models, tailoring them to specific tasks and industries. This flexibility makes Llama 3.2 a versatile choice for companies seeking customizable AI solutions that can evolve alongside their needs.

Benchmarks: Pros and Cons

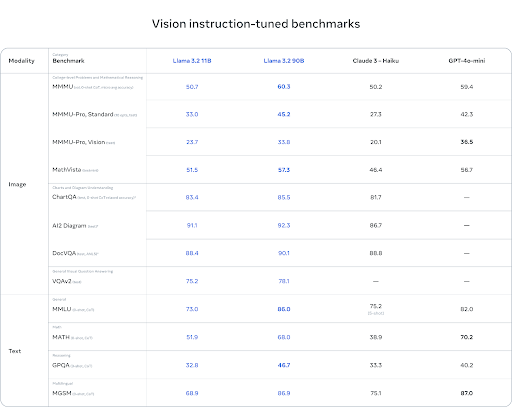

Meta reports that the Llama 3.2 vision models perform strongly on industry benchmarks, matching or exceeding some proprietary models in areas like image understanding and captioning.

However, like any advanced model, they involve trade-offs: the larger models in particular require significant memory, in exchange for robust performance on complex visual tasks.
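The memory trade-off can be estimated with simple arithmetic: weight memory is roughly the parameter count multiplied by the bytes stored per parameter. The sketch below applies that rule of thumb to the nominal sizes in the model names, which is an assumption (the released checkpoints differ slightly from the round numbers), and it counts only the weights, not the KV cache, activations, or runtime overhead.

```python
# Rough rule of thumb: weight memory ~= parameter count x bytes per parameter.
# Parameter counts are the nominal sizes in the model names (an assumption; the
# released checkpoints differ slightly), and only the weights are counted.
NOMINAL_PARAMS = {"1B": 1e9, "3B": 3e9, "11B": 11e9, "90B": 90e9}
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 10**9 bytes).

    Excludes the KV cache, activations, and runtime overhead, so real
    requirements are higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, params in NOMINAL_PARAMS.items():
    row = ", ".join(f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{name:>4} -> {row}")
```

At 16-bit precision the 90B model’s weights alone come to roughly 180 GB, which is why such models are typically split across multiple GPUs, whereas the 1B and 3B models need only a few gigabytes, and less still when quantized.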

Llama 3.2 1B & 3B Lightweight Models: Real-Time, On-Device AI for Versatile Applications

Meta’s Llama 3.2 series introduces its smallest models, with 1 billion (1B) and 3 billion (3B) parameters, designed specifically for on-device applications. These models are tailored for environments where data privacy, efficiency, and low latency are crucial. From content generation to mobile applications, the 1B and 3B models put the capabilities of advanced language models directly in users’ hands without depending on high-resource servers.

On-Device AI: Private, Responsive, and Real-Time

The 1B and 3B models bring capable AI to personal devices like smartphones, tablets, and IoT systems, allowing immediate responses while preserving user privacy. Unlike larger models, which typically require cloud resources, these lightweight models can run entirely on-device: user data stays local, and the need for constant internet connectivity is reduced. This setup supports a real-time, seamless user experience and greatly reduces the privacy risks of sending data to remote servers, making these models well suited to privacy-focused applications.

Applications of Llama 3.2 1B & 3B Models

The Llama 3.2 1B and 3B models are designed to handle a variety of tasks with significant adaptability across industries:

- Conversational AI: These models are ideal for powering interactive chatbots and virtual assistants, which can hold engaging conversations, respond to user queries, and offer real-time support without needing cloud processing.

- Content Generation: Whether it’s drafting emails, creating brief summaries, or generating code snippets, the 1B and 3B models make it possible to create meaningful content with minimal delay.

- Real-Time Language Translation: For users needing instant translation, these models can translate text across multiple languages directly on the device, supporting smooth, on-the-go communication.

- Sentiment Analysis and Customer Feedback: In apps where understanding user sentiment is critical, these models can analyze text data from reviews, social media, and customer feedback in real-time to provide insights into customer satisfaction and trends.

- Speech-to-Text Processing and Transcription Services: Because the 1B and 3B models are text-only, they cannot transcribe audio themselves, but paired with a separate speech-recognition model they can clean up, punctuate, and summarize transcripts in real time, which is useful for note-taking, accessibility features, and live captions.

- Personalized Recommendations: They are capable of generating personalized recommendations or summaries of user-preferred content by analyzing browsing or usage patterns in real-time.

How the Llama 3.2 Lightweight Models Operate

The impressive efficiency and adaptability of the Llama 3.2 lightweight models come from three core processes: pruning, distillation, and final refinement. These steps help maintain model performance while reducing computational demands.

1. Pruning: Slimming Down the Model

Pruning is the first step in the model reduction process, where unnecessary neurons, parameters, or connections within the model are removed. This is done to cut down the model’s size, making it more manageable without heavily compromising its accuracy. For example:

- Structured Pruning involves removing entire layers or blocks within the model that have minimal impact on its predictions.

- Neuron Pruning removes individual neurons that contribute little to the overall output.

This process requires careful balancing to ensure that the model’s performance remains consistent even with reduced parameters. By pruning extraneous parts of the model, the size and resource requirements of the Llama 3.2 1B and 3B models are significantly reduced, which is crucial for enabling these models to operate efficiently on smaller devices.
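Neuron-level structured pruning, which removes a hidden unit together with its incoming and outgoing weights, can be sketched on a tiny two-layer MLP. The scoring rule used here (the product of a neuron’s incoming and outgoing weight norms) is one common magnitude heuristic chosen as an assumption for illustration; the post does not describe the exact pruning criterion Meta used.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def l2_norm(vector: List[float]) -> float:
    return math.sqrt(sum(x * x for x in vector))


def prune_hidden_neurons(w_in: Matrix, w_out: Matrix, keep: int) -> Tuple[Matrix, Matrix, List[int]]:
    """Structured magnitude pruning of one MLP hidden layer.

    w_in:  hidden x input   (row i computes hidden neuron i)
    w_out: output x hidden  (column i reads hidden neuron i)
    Each neuron is scored by ||w_in[i]|| * ||w_out[:, i]||; the `keep`
    highest-scoring neurons survive and the rest are removed entirely.
    """
    hidden = len(w_in)
    scores = [l2_norm(w_in[i]) * l2_norm([row[i] for row in w_out]) for i in range(hidden)]
    # Pick the top-`keep` neurons, then restore their original order.
    kept = sorted(sorted(range(hidden), key=lambda i: scores[i], reverse=True)[:keep])
    pruned_in = [list(w_in[i]) for i in kept]              # drop rows
    pruned_out = [[row[i] for i in kept] for row in w_out]  # drop matching columns
    return pruned_in, pruned_out, kept


# 3 hidden neurons; neuron 1 has tiny weights and contributes little.
w_in = [[1.0, 1.0], [0.01, 0.0], [2.0, 0.0]]
w_out = [[1.0, 1.0, 0.5]]

pruned_in, pruned_out, kept = prune_hidden_neurons(w_in, w_out, keep=2)
print(kept)        # [0, 2]
print(pruned_in)   # [[1.0, 1.0], [2.0, 0.0]]
print(pruned_out)  # [[1.0, 0.5]]
```

Because whole neurons disappear, the weight matrices genuinely shrink (here from 3 hidden units to 2), which saves memory and compute on ordinary hardware; the accuracy lost this way is what the later distillation and refinement steps aim to recover.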

2. Distillation: Teaching Small Models from Larger Ones

After pruning, the next step is distillation, where a large, fully trained model (often called the “teacher” model) is used to transfer knowledge to the smaller “student” model. The student model learns to predict outputs based on the teacher’s predictions, effectively inheriting the teacher’s learned patterns and behaviors in a more compact form. Distillation techniques include:

- Soft Label Training: Instead of learning only from the hard labels in raw data, the smaller model also learns from the teacher’s output probability distributions, or “soft labels,” which capture how the teacher weighs alternative answers.

- Knowledge Transfer Layers: Certain layers in the teacher model are emulated in the student model, allowing for targeted focus on essential features.

This process allows the student model to achieve performance levels close to the teacher, even with far fewer parameters, making the lightweight 1B and 3B models practical for edge devices.
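Soft-label training is commonly implemented as a KL-divergence loss between temperature-softened teacher and student distributions, often blended with ordinary cross-entropy on the ground-truth label. The sketch below shows that standard formulation for a single prediction over three classes; the temperature, the blend weight `alpha`, and the logits are illustrative assumptions, and the post does not say which exact loss Meta used for Llama 3.2.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_label_loss(student_logits: List[float], teacher_logits: List[float], temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * (math.log(p) - math.log(q)) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    true_label: int,
    alpha: float = 0.5,
    temperature: float = 2.0,
) -> float:
    """Blend of the soft-label term and ordinary cross-entropy on the true label."""
    hard = -math.log(softmax(student_logits)[true_label])
    soft = soft_label_loss(student_logits, teacher_logits, temperature)
    return alpha * soft + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]        # teacher strongly prefers class 0
close_student = [3.5, 1.2, 0.4]  # similar preferences
far_student = [0.5, 1.0, 4.0]    # prefers the wrong class

print(soft_label_loss(close_student, teacher))
print(soft_label_loss(far_student, teacher))
print(distillation_loss(close_student, teacher, true_label=0))
```

The soft-label term is zero only when the student reproduces the teacher’s full distribution, so the student is pushed to learn not just the right answer but also how the teacher ranks the wrong ones.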

3. Final Refinement: Fine-Tuning for Targeted Applications

Once the lightweight model has been pruned and distilled, it undergoes final refinement or fine-tuning. This step adjusts the model based on specific application needs and real-world data, optimizing it for particular tasks such as customer support or document summarization. Fine-tuning involves:

- Task-Specific Training: Exposing the model to focused datasets that reflect its intended use case, such as customer service responses or summarization tasks.

- Error Correction and Adjustment: Using iterative training to correct any inaccuracies that may have surfaced after pruning and distillation.

Final refinement helps boost the model’s accuracy in real-world applications, allowing it to perform efficiently in industry-specific tasks despite its smaller size. This step also enables developers to customize the model for particular use cases without training a new model from scratch.
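Task-specific training data is usually stored as conversations and rendered into the model’s prompt format before fine-tuning. The sketch below renders one hypothetical customer-support example using the Llama 3 prompt format, in which each role name sits between `<|start_header_id|>` and `<|end_header_id|>` and each turn ends with `<|eot_id|>`. The helper name and the example text are illustrative assumptions.

```python
from typing import Dict, List


def format_llama3_example(messages: List[Dict[str, str]]) -> str:
    """Render a conversation as one training string in the Llama 3 prompt format."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        # Each turn: role header, blank line, trimmed content, end-of-turn token.
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    return "".join(parts)


# One hypothetical customer-support training example.
example = [
    {"role": "system", "content": "You are a concise, polite customer-support assistant."},
    {"role": "user", "content": "My order arrived damaged. What should I do?"},
    {
        "role": "assistant",
        "content": "Sorry about that. Reply with your order number and a photo of the damage, and we will send a replacement.",
    },
]

print(format_llama3_example(example))
```

In practice, the tokenizer’s `apply_chat_template` method in Hugging Face `transformers` is the safer route, because the official Llama 3.1 and 3.2 templates can add extra system-prompt text (such as date information) that a hand-built string like this omits.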

Benchmarks: Pros and Cons

Meta reports that the Llama 3.2 vision models perform well against leading models such as Claude 3 Haiku and GPT-4o mini on image recognition and visual understanding tasks. Among the lightweight models, the 3B model outperforms Gemma 2 (2.6B) and Phi 3.5-mini on tasks such as instruction following and summarization, while the 1B model remains competitive with Gemma.

Llama 3.2 1B & 3B Models: A Versatile Choice for Edge AI

Thanks to these rigorous optimization processes, the Llama 3.2 lightweight models provide businesses with a customizable, private, and efficient AI option for on-device use. From conversational agents that engage users in real-time to recommendation engines that adapt to personal preferences without data leaving the device, the 1B and 3B models empower industries with private, responsive AI suitable for edge environments.

Llama Stack Distribution: Streamlining Llama Model Deployment

The Llama Stack Distribution represents Meta’s initiative to create a standardized, flexible API for using Llama models across multiple platforms and environments. In July 2024, Meta introduced the Llama Stack API as a proposed standard for customizing and deploying Llama models in applications ranging from real-time on-device AI to robust cloud-based solutions.

This API has since been refined with the support of community feedback, resulting in a fully functional API ecosystem tailored for model fine-tuning, synthetic data generation, and streamlined deployment across diverse infrastructures.

Meta has built a reference implementation of the Llama Stack API, covering three key functionalities: inference, tool integration, and retrieval-augmented generation (RAG). This implementation allows developers to create agent-based applications with a unified, consistent API experience. As part of this effort, Meta has partnered with multiple providers to ensure the API can adapt seamlessly across different deployment scenarios.

By introducing the Llama Stack Distribution, Meta now offers an optimized suite of API providers, bundled as a cohesive package for ease of use. This distribution package enables developers to access Llama models from a single endpoint, simplifying integration across environments including cloud, on-premises, single-node setups, and even mobile devices.

Goals and Benefits of the Llama Stack Distribution

The Llama Stack Distribution was designed with specific goals in mind to address the needs of developers and enterprises seeking efficient, adaptable AI solutions:

- Standardization: The set of APIs creates a consistent interface and environment for developers, simplifying the process of adapting applications as new models are released. This standardization promotes faster development and easier integration across projects.

- Synergy and Modularity: By encapsulating complex functionalities into modular APIs, Llama Stack Distribution enables seamless collaboration between tools and components, fostering a flexible environment where developers can pick and choose the best functionalities for their needs.

- Streamlined Development: Llama Stack Distribution accelerates the development lifecycle by offering predefined core functionalities that reduce setup time and speed up deployment. With these tools, developers can focus on refining model applications rather than building supporting infrastructure from scratch.
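The standardization and modularity goals rest on a familiar design pattern: applications code against one interface, and interchangeable providers sit behind it. The sketch below illustrates that pattern only. Every class, method, and model identifier in it is hypothetical and is not the Llama Stack API; the real client libraries define their own names and signatures.

```python
from typing import Dict, List, Protocol

Message = Dict[str, str]


class InferenceProvider(Protocol):
    """The one interface every backend implements (hypothetical)."""

    def chat(self, model: str, messages: List[Message]) -> str: ...


class LocalEchoProvider:
    """Stand-in for an on-device or single-node backend (hypothetical)."""

    def chat(self, model: str, messages: List[Message]) -> str:
        return f"[local:{model}] {messages[-1]['content']}"


class CloudEchoProvider:
    """Stand-in for a hosted cloud backend (hypothetical)."""

    def chat(self, model: str, messages: List[Message]) -> str:
        return f"[cloud:{model}] {messages[-1]['content']}"


class StackRouter:
    """Routes requests from one standardized API to interchangeable providers."""

    def __init__(self) -> None:
        self._providers: Dict[str, InferenceProvider] = {}

    def register(self, name: str, provider: InferenceProvider) -> None:
        self._providers[name] = provider

    def chat(self, provider: str, model: str, messages: List[Message]) -> str:
        return self._providers[provider].chat(model, messages)


def ask(router: StackRouter, provider: str) -> str:
    # Application code stays the same no matter which backend serves it.
    return router.chat(provider, "llama-3.2-3b", [{"role": "user", "content": "Hello"}])


router = StackRouter()
router.register("local", LocalEchoProvider())
router.register("cloud", CloudEchoProvider())
print(ask(router, "local"))  # [local:llama-3.2-3b] Hello
print(ask(router, "cloud"))  # [cloud:llama-3.2-3b] Hello
```

Swapping a local backend for a cloud one changes only the registration step, not the application logic, which is the kind of portability a standardized API is meant to provide.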

Key Components of the Llama Stack Distribution

The comprehensive set of tools and resources within the Llama Stack Distribution includes:

- Llama CLI: The Llama Command Line Interface (CLI) helps developers build, configure, and run Llama Stack distributions, streamlining development workflows.

- Cross-Platform Client Libraries: Client code is available in several languages, including Python, Node.js, Kotlin, and Swift. This cross-language compatibility allows developers to build Llama-based applications in their preferred programming environments, broadening the adoption of Llama models across diverse developer ecosystems.

- Docker Support: To facilitate flexible deployment, Meta provides Docker containers for both the Llama Stack Distribution Server and the Agents API Provider. These containers enable developers to deploy Llama models in isolated environments, offering consistent performance and compatibility across different systems.

- Multiple Distribution Options:

  - Single-Node Llama Stack Distribution: This option, available via Meta’s internal implementation and through a partnership with Ollama, allows for running Llama models on a single computing node, ideal for testing and small-scale applications.

  - Cloud-Based Distributions: For scalable, high-performance use, cloud distributions of Llama are available via partnerships with major cloud providers like AWS, Databricks, Fireworks, and Together. These options provide robust support for large-scale deployments, enabling enterprises to leverage Llama’s capabilities within their existing cloud infrastructure.

  - On-Device Llama Distribution: Recognizing the growing demand for real-time, private AI on mobile devices, Meta has made Llama accessible on iOS through PyTorch’s ExecuTorch, optimizing it for on-device inference. This option allows developers to integrate Llama models directly into mobile applications, providing users with real-time AI processing without relying on external servers.

  - On-Premises Llama Distribution: For organizations that require full control over data and infrastructure, Meta offers an on-premises distribution supported by Dell. This allows businesses to deploy Llama models within their secure, internal networks, ensuring compliance with stringent data privacy regulations.

The Llama Stack Distribution exemplifies Meta’s commitment to supporting developer and enterprise needs by providing flexible, customizable solutions for deploying AI models across a wide range of environments.

A Commitment to Safety

Ensuring the safe and ethical use of AI is a priority for Meta, and Llama 3.2 incorporates robust measures to align with these principles. Through supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), the model is guided to prioritize responses that are safe, relevant, and helpful.

This two-stage fine-tuning approach helps Llama 3.2 respond appropriately in a variety of sensitive scenarios, declining inappropriate requests and reducing the risk of biased or harmful outputs.

Meta has also introduced improved safety protocols within Llama 3.2 to safeguard privacy and limit the exposure to sensitive information. The model includes mechanisms to mitigate potential biases and is trained to adhere to ethical guidelines, reducing the chance of propagating harmful stereotypes or misinformation.

Additionally, the open development process around Llama 3.2 encourages feedback and iterative improvement, allowing developers to help strengthen its safety features over time. Together, these measures help make Llama 3.2 a sound choice for applications that involve direct user interaction.

Accessing Llama 3.2 Models

Accessing and downloading Llama 3.2 models is straightforward. Here are the key platforms and options available:

- Official Llama Website:

  - Direct download of Llama 3.2 models.

  - Includes both lightweight models (1B and 3B) and larger vision-enabled models (11B and 90B).

- Hugging Face:

  - Another platform where Llama 3.2 models can be accessed.

  - Popular among developers in the AI community for easy access to various AI models.

- Partner Platforms:

  - Llama 3.2 models are also available for development on a wide range of partner platforms, including:

    - AMD

    - AWS

    - Databricks

    - Dell

    - Google Cloud

    - Groq

    - IBM

    - Intel

    - Microsoft Azure

    - NVIDIA

    - Oracle Cloud

    - Snowflake

    - And others

These options provide developers with flexibility in choosing the most suitable platform for their needs.

Conclusion

With Llama 3.2, Meta delivers a versatile, accessible, and safety-focused AI model family that can support a wide range of applications across industries. From its robust vision models to its lightweight, privacy-centric text models, Llama 3.2 represents a bold step forward in edge AI. Because the models are openly available, developers and organizations alike have the tools they need to bring transformative AI experiences to their users.

Areeb is a versatile machine learning engineer with a focus on computer vision and auto-generative models. He excels in custom model training, crafting innovative solutions to meet specific client needs. Known for his technical brilliance and forward-thinking approach, Areeb constantly pushes the boundaries of AI by incorporating cutting-edge research into practical applications, making him a respected developer at Folio3.