Introduction

Researchers at Meta AI developed the Segment Anything Model (SAM), a general-purpose image segmentation model released in 2023. Instead of building a separate model for each segmentation task, you prompt one model to segment what you need. This is a real shift in how visual data analysis is handled.

At its core, SAM is designed to simplify segmentation by serving as a foundation model that can be prompted with clicks (points), bounding boxes, or rough masks. Text prompts were explored in Meta's research paper but are not supported by the publicly released model. This approach reduces the need for task-specific modeling expertise and custom data annotation, making image segmentation accessible to a broader range of users and applications.

Understanding Object Detection Vs. Image Segmentation

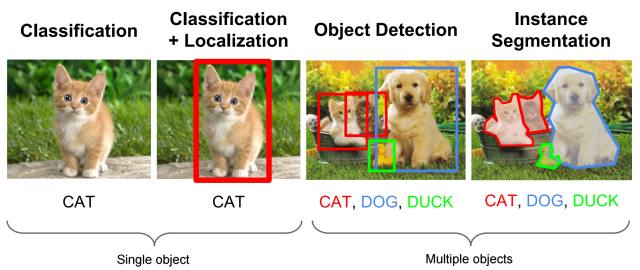

Object detection marks each object in an image with a bounding box: a rectangle drawn around the object, usually together with a class label.

While it identifies the object and its location, it doesn’t capture the finer details of its shape or separate it from touching or overlapping objects.

Image segmentation tackles these limitations by providing pixel-level information. It creates a digital mask that separates the object of interest from the background and potentially other objects in the image.

In many applications, the precise shape of an object matters, not just its rough location. Segmentation labels every pixel that belongs to the object, which is why masks are often visualized by coloring the object in. Self-driving cars are a typical example: segmenting pedestrians and vehicles precisely is essential for safe navigation.

Even when objects are close together or touching, image segmentation can separate them more cleanly than bounding boxes, which may overlap each other or include background pixels.
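To make the difference concrete, here is a small, self-contained Python sketch using toy data (not SAM output). Two touching objects on a 5x5 grid are stored as binary masks, and each mask is also reduced to its bounding box. The helper names (`mask_to_box`, `box_overlap`, `mask_overlap`) are illustrative and do not come from any library.

```python
from typing import List, Tuple

Mask = List[List[int]]           # 1 = object pixel, 0 = background
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), inclusive pixel coordinates

# Toy scene: object A is an L-shape; object B fills the corner the L wraps around.
MASK_A: Mask = [
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
]
MASK_B: Mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1],
    [0, 1, 1, 1, 1],
    [0, 1, 1, 1, 1],
    [0, 1, 1, 1, 1],
]


def mask_to_box(mask: Mask) -> Box:
    """Smallest axis-aligned box that contains every object pixel."""
    xs = [x for row in mask for x, v in enumerate(row) if v]
    ys = [y for y, row in enumerate(mask) for v in row if v]
    return min(xs), min(ys), max(xs), max(ys)


def box_overlap(a: Box, b: Box) -> int:
    """Number of pixels covered by both boxes."""
    w = min(a[2], b[2]) - max(a[0], b[0]) + 1
    h = min(a[3], b[3]) - max(a[1], b[1]) + 1
    return max(w, 0) * max(h, 0)


def mask_overlap(a: Mask, b: Mask) -> int:
    """Number of pixels claimed by both masks."""
    return sum(1 for ra, rb in zip(a, b) for va, vb in zip(ra, rb) if va and vb)


if __name__ == "__main__":
    box_a, box_b = mask_to_box(MASK_A), mask_to_box(MASK_B)
    print("boxes:", box_a, box_b)                               # (0, 0, 4, 4) (1, 1, 4, 4)
    print("shared pixels (boxes):", box_overlap(box_a, box_b))  # 16
    print("shared pixels (masks):", mask_overlap(MASK_A, MASK_B))  # 0
```

The boxes share 16 pixels even though the objects share none, which is exactly the ambiguity that per-pixel masks remove.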

However, not all image segmentation models are created equal. While many exist, the Segment Anything Model stands out for its versatility and user-friendliness.

We understand it was created to segment images, but what exactly is the Segment Anything Model?


Segment Anything Model Intro

SAM makes segmenting objects much simpler. Traditionally, an image segmentation model had to be trained for a specific task, such as segmenting cars in images, which requires specialized work and a dedicated labeled dataset.

SAM, however, is more flexible: it can segment a wide variety of objects without task-specific training. It was trained on SA-1B, a dataset Meta released alongside the model, which contains about 1.1 billion masks on 11 million images.

Meta evaluated the model on a range of tasks and datasets and reported that its zero-shot performance is strong, often competitive with or better than earlier fully supervised results.

Zero-shot performance describes how well the model handles new, unseen data or tasks without any additional training.

In practice, this means SAM can segment objects and image types it never saw during training.
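Zero-shot segmentation results are usually scored by comparing each predicted mask with a human-annotated one using intersection over union (IoU), then averaging over a dataset (mean IoU). Below is a minimal Python sketch of that metric, assuming masks are same-sized nested lists of 0/1 values. Treating two empty masks as a perfect match is a convention chosen for this sketch, not a universal rule.

```python
from typing import Iterable, List, Tuple

Mask = List[List[int]]


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two same-sized binary masks."""
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            inter += bool(p and t)
            union += bool(p or t)
    # Convention used here: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def mean_iou(pairs: Iterable[Tuple[Mask, Mask]]) -> float:
    """Average IoU over (prediction, ground truth) pairs."""
    scores = [mask_iou(p, t) for p, t in pairs]
    if not scores:
        raise ValueError("no mask pairs given")
    return sum(scores) / len(scores)


if __name__ == "__main__":
    pred = [[1, 1, 0], [0, 1, 0]]
    truth = [[1, 1, 0], [0, 0, 0]]
    print(mask_iou(pred, truth))  # 2 shared pixels / 3 covered pixels
    print(mean_iou([(pred, truth), (truth, truth)]))
```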

SAM’s Strengths

- Multiple Guidance Options: You can tell SAM what to segment in a few ways:

- Click on the object directly in the image.

- Click foreground points to include areas and background points to exclude them.

- Provide a bounding box around the object.

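- Ambiguity Handling: When a prompt is ambiguous, SAM can return several valid masks instead of one. For example, a single click on a shirt could mean the shirt or the whole person wearing it, so SAM proposes multiple candidates (typically three), each with a confidence score.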

- Automatic Segmentation: SAM can also segment everything in an image without user guidance by prompting itself with a regular grid of points and keeping the best, non-duplicate masks.

- Real-time Interaction: Once SAM has encoded an image, it can generate a mask for each new prompt (such as a click on another object) quickly; Meta reports roughly 50 milliseconds per prompt, fast enough for interactive use.
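The speed of interactive prompting comes from how SAM splits its work: the heavy image encoder runs once per image, and only the lightweight prompt encoder and mask decoder run for each click. Meta's reference `SamPredictor` follows this pattern (`set_image` once, then `predict` per prompt). The sketch below shows the same caching idea in plain Python; `PromptableSegmenter` and the toy encoder and decoder are hypothetical stand-ins, not SAM itself.

```python
from typing import Any, Callable, Dict, Hashable, List, Tuple

Point = Tuple[int, int]


class PromptableSegmenter:
    """Hypothetical wrapper: run the expensive encoder once per image,
    then answer each prompt with the cheap decoder."""

    def __init__(self,
                 encode: Callable[[Any], Any],
                 decode: Callable[[Any, List[Point]], Any]) -> None:
        self._encode = encode
        self._decode = decode
        self._embeddings: Dict[Hashable, Any] = {}
        self.encoder_calls = 0  # counts how often the slow step actually ran

    def segment(self, image_id: Hashable, image: Any, clicks: List[Point]) -> Any:
        # Encode only the first time this image is seen; reuse afterwards.
        if image_id not in self._embeddings:
            self._embeddings[image_id] = self._encode(image)
            self.encoder_calls += 1
        return self._decode(self._embeddings[image_id], clicks)


if __name__ == "__main__":
    # Toy stand-ins: the "embedding" is just the pixel sum, and the "mask"
    # is a tuple echoing the embedding and the clicks.
    seg = PromptableSegmenter(
        encode=lambda img: sum(map(sum, img)),
        decode=lambda emb, clicks: (emb, tuple(clicks)),
    )
    image = [[1, 2], [3, 4]]
    for clicks in ([(0, 0)], [(1, 1)], [(0, 1), (1, 0)]):
        print(seg.segment("photo-1", image, clicks))
    print("encoder ran", seg.encoder_calls, "time(s)")
```

Three prompts on the same image trigger only one encoder call; a real application would also evict old embeddings to bound memory.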

How Segment Anything Model Works

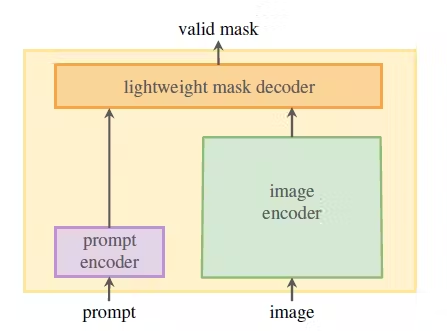

SAM is a deep learning model built specifically for promptable image segmentation.

It is not a plain convolutional neural network (CNN). Its architecture has three parts: a large Vision Transformer (ViT) image encoder, a prompt encoder for points, boxes, and masks, and a lightweight transformer-based mask decoder that combines the two to predict segmentation masks.

Here’s a simplified explanation of how the Segment Anything Model works.

- Input Image: The process starts with an input image containing one or more objects to segment. Images of any size are accepted, but Meta's reference implementation resizes them so the longest side is 1,024 pixels before encoding.

- Feature Extraction: The Vision Transformer image encoder processes the image once and produces an image embedding, a compact feature map that preserves spatial information.

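- Segmentation: The prompt encoder turns the user's points, box, or mask into prompt embeddings, and the mask decoder combines them with the image embedding to produce one or more masks, each with a predicted quality score. A mask separates the object from the background by marking exactly the pixels that belong to it.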

- Training: SAM must be trained on a large, richly annotated image dataset before deployment. Meta has already done this with its SA-1B dataset, so most users start from the released model checkpoints. During training, backpropagation adjusts the model's internal parameters so that, given an image and a prompt, it predicts masks that match the annotated ones.

- Inference: Once trained, SAM segments objects in new, unseen images. At inference time, it takes an image plus a prompt and returns masks outlining the requested object.

- Post-processing (Optional): In some cases, post-processing techniques such as removing small disconnected regions, filling holes, or smoothing mask edges may be applied to improve the final segmentation results.
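As one example of post-processing, Meta's reference automatic mask generator has an optional `min_mask_region_area` setting that removes small disconnected regions and holes from masks. The sketch below implements only the first half of that idea (dropping small islands) with a breadth-first search over 4-connected pixels. It is an illustration in plain Python, and `remove_small_regions` here is a hypothetical helper, not Meta's implementation.

```python
from collections import deque
from typing import List

Mask = List[List[int]]


def remove_small_regions(mask: Mask, min_area: int) -> Mask:
    """Return a copy of `mask` in which every 4-connected foreground region
    smaller than `min_area` pixels is set to background."""
    h = len(mask)
    w = len(mask[0]) if h else 0
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first search collects one connected region.
            region = []
            queue = deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) < min_area:
                for y, x in region:
                    out[y][x] = 0
    return out


if __name__ == "__main__":
    noisy = [[1, 1, 0, 0],
             [1, 1, 0, 1],   # the lone pixel on the right is a stray island
             [0, 0, 0, 0]]
    print(remove_small_regions(noisy, min_area=2))
```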

Why Use SAM

The Segment Anything Model (SAM) offers several compelling reasons to consider it for your image segmentation needs:

Versatility and User-friendliness

- Multiple Guidance Options: Unlike traditional models requiring specific training data, SAM provides flexibility in how you tell it what to segment. You can click directly on the object, select points around it, or draw a bounding box. This makes it accessible to users with varying levels of technical expertise.

- Automatic Segmentation (Optional): SAM can go beyond user-guided segmentation. It can attempt to automatically identify and segment all objects within an image, saving you time and effort, especially for tasks involving numerous objects.
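Automatic segmentation works by prompting the model with a regular grid of points and keeping only confident masks. In Meta's reference `SamAutomaticMaskGenerator`, grid density is set by `points_per_side` (32 by default, so 1,024 point prompts per image), and low-quality masks are filtered by each mask's predicted IoU and stability score (`pred_iou_thresh`, `stability_score_thresh`). The Python sketch below reproduces the grid layout and a simple threshold filter; the candidate dictionaries and threshold values are illustrative, and the duplicate-removal step (non-maximum suppression) is omitted.

```python
from typing import Dict, List, Tuple


def build_point_grid(n_per_side: int) -> List[Tuple[float, float]]:
    """Evenly spaced (x, y) prompts in normalized [0, 1] image coordinates,
    offset by half a cell so no point lies on the border."""
    if n_per_side < 1:
        raise ValueError("n_per_side must be at least 1")
    offset = 1.0 / (2 * n_per_side)
    step = (1.0 - 2 * offset) / (n_per_side - 1) if n_per_side > 1 else 0.0
    coords = [offset + i * step for i in range(n_per_side)]
    return [(x, y) for y in coords for x in coords]


def to_pixels(points: List[Tuple[float, float]], width: int,
              height: int) -> List[Tuple[float, float]]:
    """Scale normalized points to pixel coordinates for a given image size."""
    return [(x * width, y * height) for x, y in points]


def keep_confident(candidates: List[Dict], iou_thresh: float,
                   stability_thresh: float) -> List[Dict]:
    """Drop candidate masks whose quality scores fall below either threshold."""
    return [c for c in candidates
            if c["predicted_iou"] >= iou_thresh
            and c["stability_score"] >= stability_thresh]


if __name__ == "__main__":
    print(len(build_point_grid(32)))  # 1024 prompts
    print(to_pixels(build_point_grid(2), width=640, height=480))
    candidates = [
        {"id": "cup", "predicted_iou": 0.95, "stability_score": 0.97},
        {"id": "shadow", "predicted_iou": 0.70, "stability_score": 0.96},
        {"id": "table", "predicted_iou": 0.91, "stability_score": 0.80},
    ]
    print([c["id"] for c in keep_confident(candidates, 0.88, 0.95)])  # ['cup']
```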

Efficiency and Accuracy

- Real-time Interaction: Once SAM processes the initial image, it can generate segmentation masks for different objects very quickly. This allows for efficient workflows where multiple objects can be segmented within the same image without significant delays.

- Ambiguity Handling: When a prompt could refer to more than one object, such as a part or the whole, SAM can generate several candidate masks with confidence scores. This lets you choose the one that best fits your needs.

- Deep Learning Approach: SAM does not learn from the images you run through it, but because it is a deep learning model, its accuracy on a particular domain can be improved by fine-tuning it on additional annotated data.
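Putting the guidance options and ambiguity handling together: Meta's reference `SamPredictor.predict` accepts click points as (x, y) pixel coordinates with one label per point (1 for include, 0 for exclude), an optional box as `[x0, y0, x1, y1]`, and, with `multimask_output=True`, returns several masks with a score for each. The plain-Python sketch below packs prompts into that layout and picks the highest-scoring candidate. The helper names are hypothetical, and in real use the lists would be converted to NumPy arrays before calling the predictor.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
BoxXYXY = Tuple[float, float, float, float]


def build_prompt(include: Sequence[Point] = (), exclude: Sequence[Point] = (),
                 box: Optional[BoxXYXY] = None) -> Dict:
    """Pack clicks and an optional box: label 1 = include (foreground),
    label 0 = exclude (background), box as [x0, y0, x1, y1]."""
    coords = [list(p) for p in include] + [list(p) for p in exclude]
    labels = [1] * len(include) + [0] * len(exclude)
    prompt: Dict = {}
    if coords:
        prompt["point_coords"] = coords
        prompt["point_labels"] = labels
    if box is not None:
        x0, y0, x1, y1 = box
        if x1 <= x0 or y1 <= y0:
            raise ValueError("box must satisfy x0 < x1 and y0 < y1")
        prompt["box"] = [x0, y0, x1, y1]
    return prompt


def pick_best(masks: List[object], scores: List[float]) -> Tuple[object, float]:
    """Keep the candidate with the highest score. An interactive tool
    might instead show every candidate and let the user choose."""
    if not masks or len(masks) != len(scores):
        raise ValueError("need one score per mask")
    best = max(range(len(scores)), key=scores.__getitem__)
    return masks[best], scores[best]


if __name__ == "__main__":
    print(build_prompt(include=[(120, 80)], exclude=[(200, 40)],
                       box=(100, 30, 260, 300)))
    # Suppose the model returned three nested candidates for an ambiguous click.
    print(pick_best(["pocket", "shirt", "person"], [0.62, 0.91, 0.85]))  # ('shirt', 0.91)
```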

Features of Segment Anything Model

The Segment Anything Model offers several key features, making it a powerful tool for image segmentation tasks.

- Versatility: Regardless of an object’s size, form, or orientation, SAM can segment a vast range of objects inside images. It can effectively handle complex scenes with multiple objects and background variations.

- High Accuracy: Trained on more than a billion annotated masks, SAM produces high-quality object boundaries on a wide range of images. Accuracy can be lower on specialized domains that differ from its training data, such as medical scans.

- Class-agnostic Masks: SAM segments objects without naming them. Its masks separate distinct regions of an image but carry no class labels such as "car" or "person"; to obtain semantic labels, SAM's masks are typically combined with a separate classifier or detector.

- Scalability: SAM splits its work so that the expensive image encoder runs once per image, while the lightweight decoder answers each prompt quickly. This makes it practical to process large image collections, although the largest image encoder runs much faster on a GPU, and high-resolution images are downscaled before encoding, which can lose very fine details.

- Adaptability: SAM can be fine-tuned for specific segmentation tasks. Training it further on a specialized dataset helps it segment objects accurately in a particular domain or context.

- Robustness to Noise and Variability: SAM copes reasonably well with real-world noise, occlusions, low lighting, and image artifacts, although results in especially challenging conditions should still be checked.
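On the high-resolution point: Meta's reference implementation does not feed full-resolution images to the encoder. It resizes each image so its longest side is 1,024 pixels, preserving the aspect ratio, and pads the result to a 1,024 × 1,024 square. The Python sketch below computes those sizes; the function names are hypothetical, and rounding to the nearest pixel is an assumption of this sketch.

```python
from typing import Tuple

TARGET = 1024  # longest-side length used by SAM's reference preprocessing


def resized_shape(height: int, width: int, long_side: int = TARGET) -> Tuple[int, int]:
    """Scale (height, width) so the longer side equals `long_side`,
    keeping the aspect ratio and rounding to the nearest pixel."""
    scale = long_side / max(height, width)
    return int(height * scale + 0.5), int(width * scale + 0.5)


def padding(height: int, width: int, long_side: int = TARGET) -> Tuple[int, int]:
    """Rows and columns of padding that turn the resized image into a square."""
    new_h, new_w = resized_shape(height, width, long_side)
    return long_side - new_h, long_side - new_w


if __name__ == "__main__":
    for h, w in [(3000, 4000), (480, 640), (1024, 1024)]:
        print((h, w), "->", resized_shape(h, w), "pad", padding(h, w))
```

A 3000 × 4000 photo is shrunk to 768 × 1024, so objects only a few pixels wide in the original may become hard to segment.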

Applications of Segment Anything Model

The Segment Anything Model (SAM) can be applied in various fields due to its ability to segment objects within images accurately. Here are some potential applications.

- Medical Imaging: Hospitals produce large volumes of medical images, such as MRIs, CT scans, and X-rays, and segmentation helps identify organs, tumors, and other anomalies in them. SAM can speed up this work, particularly when fine-tuned on medical data, since its zero-shot results on medical images are less reliable than on everyday photos. Segmentation is essential for tracking disease progression, planning therapy, and supporting diagnosis.

- Autonomous Vehicles: Self-driving systems must distinguish vehicles, cyclists, pedestrians, and other objects around them. SAM can segment these objects in camera images, and its masks can be combined with data from other sensors such as LiDAR. This helps autonomous vehicles navigate safely and make informed decisions.

- Retail and E-commerce: To help with visual search, product recommendations, and inventory management on e-commerce platforms, SAM can segment products within photos. It can also support systems that detect manufacturing defects or counterfeit products by isolating the product region for inspection.

- Security and Surveillance: By segmenting people, vehicles, and suspicious items in video frames, SAM can help security professionals monitor public areas, airports, and critical infrastructure.

Conclusion

With further advances in artificial intelligence and computer vision, SAM has the potential to change how we perceive and interact with our visual world. Its ability to segment almost any object in an image from a simple click or box opens up many possibilities for innovation and problem-solving across domains.

So, SAM is here to stay: it will continue to evolve and is likely to have a significant impact across many fields.

FAQ: Segment Anything Model (SAM)

1. What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is an advanced AI model designed to segment objects within images. It is capable of identifying and delineating objects in a wide variety of images with high precision.

2. How does SAM work?

SAM uses a Vision Transformer image encoder to turn an image into an embedding, a prompt encoder to represent points, boxes, or masks, and a lightweight mask decoder that combines both to predict masks. It learned the features and boundaries of objects from the SA-1B dataset of over a billion masks, which enables it to segment new images accurately.

3. What are the main applications of SAM?

SAM can be used in numerous applications, including autonomous driving, medical imaging, robotics, video surveillance, and any other field where object detection and segmentation are crucial.

4. How is SAM different from other segmentation models?

SAM distinguishes itself by its versatility and accuracy. It is designed to segment “anything,” meaning it can handle a wide range of objects and environments without needing specialized training for each new type of object.

5. Can SAM be used for real-time image processing?

Partly. Once an image has been encoded, SAM answers each new prompt in roughly 50 milliseconds, which is fast enough for interactive tools. Encoding the image with the large image encoder takes considerably longer, so real-time video applications typically need a GPU or a lighter SAM-derived model.

6. What are the benefits of using SAM in autonomous driving?

In autonomous driving, SAM enhances the vehicle’s ability to understand its surroundings by accurately segmenting pedestrians, other vehicles, road signs, and obstacles. This improves navigation safety and efficiency.

7. How does SAM contribute to advancements in medical imaging?

SAM can be used to segment medical images, such as MRI or CT scans, to identify and delineate anatomical structures and abnormalities. This assists radiologists and medical professionals in diagnosing and planning treatments more accurately.

8. Is SAM capable of learning from new data?

Yes, SAM can be retrained or fine-tuned with new data to improve its segmentation capabilities. This adaptability makes it a powerful tool for continuously evolving applications.

9. What kind of datasets are required to train SAM?

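Meta trained SAM on SA-1B, about 11 million diverse images with roughly 1.1 billion masks, built with a model-in-the-loop data engine. You do not need a dataset of that size to use SAM: the released checkpoints work out of the box, and fine-tuning for a specific domain needs a much smaller set of images with annotated masks.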

10. How does SAM handle occlusions and overlapping objects?

SAM handles many cluttered scenes well because a point or box prompt tells it which object to delineate, and it can return several candidate masks when the target is ambiguous. Heavily occluded objects remain challenging, so masks in such cases may need manual correction.

Dawood is a digital marketing pro and AI/ML enthusiast. His blogs on Folio3 AI are a blend of marketing and tech brilliance. Dawood’s knack for making AI engaging for users sets his content apart, offering a unique and insightful take on the dynamic intersection of marketing and cutting-edge technology.